ECFD workshop, 4th edition, 2021

Description

- Virtual event from 22nd to 26th of March 2021

- Two types of sessions:

  - plenary technical presentations: roadmaps, specific topics.

  - mini-workshops: potential topics are listed below.

- Free of charge

- More than 50 participants from academia (CERFACS, CORIA, IMAG, LEGI, UMONS, UVM, VUB), HPC centers and experts (GENCI, IDRIS, NVIDIA, HPE) and industry (Safran, Ariane Group).

News

Announcements on LinkedIn

Objectives

- Bring together experts in high-performance computing, applied mathematics and multi-physics CFD

- Identify the technological barriers of exascale CFD via numerical experiments

- Identify industrial needs and challenges in high-performance computing

- Propose action plans to add to the development roadmaps of the AVBP and YALES2 codes

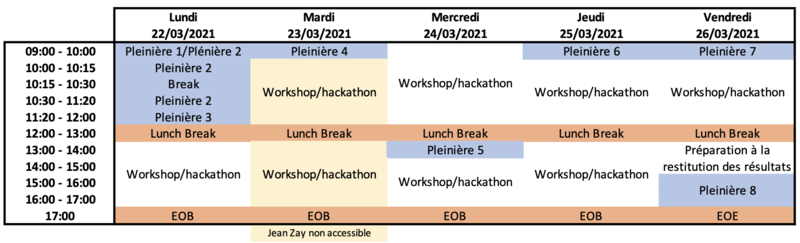

Agenda

Plenary 1

Monday 22/03/2021, 9:00-9:20

Introduction (organisation, agenda of the week, etc.)

V. Moureau (CORIA), G. Balarac (LEGI), C. Piechurski (GENCI)

Plenary 2

Monday 22/03/2021, 9:20-11:20

Presentation of the workshop projects and of the hackathon topics

Project leaders

Plenary 3

Monday 22/03/2021, 11:20-12:00

Jean Zay "Contrat de Progrès": a vehicle for supporting users in porting applications to new technologies

P.-F. Lavallée (IDRIS)

Plenary 4

Tuesday 23/03/2021, 9:00-10:00

Evolution of GPU programming: CUDA, OpenACC, standard languages (C++, Fortran)

F. Courteille (NVIDIA)

Plenary 5

Wednesday 24/03/2021, 13:00-14:00

Porting AVBP and YALES2 to GPU: what does it involve in practice?

G. Staffelbach (CERFACS) & V. Moureau (CORIA)

Plenary 6

Thursday 25/03/2021, 9:00-10:00

Approach and methodology for supporting the porting of a code to NVIDIA GPUs

P.-E. Bernard (HPE)

Plenary 7

Friday 26/03/2021, 9:00-10:00

YALES2 & AVBP roadmaps

V. Moureau (CORIA) & N. Odier (CERFACS)

Plenary 8

Friday 26/03/2021, 15:00-17:00

Wrap-up: presentation of results and general conclusion

Project leaders + V. Moureau (CORIA)

Thematics / Mini-workshops

These mini-workshops may change and cover more or fewer topics. This page will be updated according to your feedback.

Combustion - B. Cuenot, CERFACS

- H2 and alternative fuels combustion

- turbulent combustion modeling

Static and dynamic mesh adaptation - G. Balarac, LEGI

Participants: G. Balarac and M. Bernard (LEGI), Y. Dubief (Vermont U.), U. Vigny and L. Bricteux (Mons U.), A. Grenouilloux, S. Meynet and P. Bénard (CORIA), R. Mercier and J. Leparoux (Safran Tech), P. Mohanamuraly, G. Staffelbach and N. Odier (CERFACS)

Mesh adaptation is now an essential procedure for performing numerical simulations in complex geometries. The aim of mesh adaptation is to define an "objective" mesh that offers the best compromise between accuracy and computational cost, with a reproducibility property, i.e. independent of the user. This project thus gathered six sub-projects related to static and dynamic mesh adaptation, with the main objectives of improving the mesh adaptation capabilities of the codes (sub-projects 1 and 2), allowing automatic mesh convergence (sub-projects 3 and 4), and performing dynamic mesh adaptation for specific cases (sub-projects 5 and 6).

- Sub-project 1: Coupling TreeAdapt / AVBP (P. Mohanamuraly, G. Staffelbach)

The main objective of this sub-project was to couple the TreeAdapt library with the AVBP code. TreeAdapt is a library based on the partitioning library TreePart; it provides a hierarchical, topology-aware, massively parallel online interface for unstructured mesh adaptation. During the workshop, one-way coupling with AVBP was successfully achieved and work on two-way coupling started.

- Sub-project 2: New features in YALES2 (A. Grenouilloux, S. Meynet, M. Bernard, R. Mercier):

The main objectives of this sub-project were to develop in YALES2 (i) anisotropic mesh adaptation and (ii) a new partitioning algorithm for a more efficient mesh adaptation procedure. To allow anisotropic mesh adaptation, a new metric definition based on a tensor stored at cells has been proposed. The new partitioning algorithm creates halos around poor-quality cells and ensures contiguity.
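The source does not describe how the new YALES2 cell-based metric tensor is defined. For readers unfamiliar with metric-based anisotropic adaptation, a classic construction turns the Hessian H of a sensor field into a symmetric positive-definite metric. This construction is assumed here for illustration and is not the YALES2 definition. It diagonalises H = R Λ R^T, takes |Λ| scaled by a target error `eps`, and bounds the eigenvalues so that the implied cell sizes h_k = 1/sqrt(λ_k) stay within [`h_min`, `h_max`]. The function names and parameters are hypothetical.

```python
import numpy as np

def metric_from_hessian(hess, eps, h_min, h_max):
    """Build an SPD metric tensor from the Hessian of a sensor field.

    Hypothetical illustration of a classic Hessian-based metric, not the YALES2 one.
    eps: target interpolation error; h_min/h_max: allowed cell-size bounds.
    """
    hess = 0.5 * (hess + hess.T)                         # enforce symmetry
    lam, vecs = np.linalg.eigh(hess)                     # H = R diag(lam) R^T
    lam = np.abs(lam) / eps                              # curvature magnitude scaled by target error
    lam = np.clip(lam, 1.0 / h_max**2, 1.0 / h_min**2)   # keep sizes h = 1/sqrt(lam) in bounds
    return vecs @ np.diag(lam) @ vecs.T

def directional_sizes(metric):
    """Target cell sizes along the metric's principal directions (columns of vecs)."""
    lam, vecs = np.linalg.eigh(metric)
    return 1.0 / np.sqrt(lam), vecs
```

For H = diag(100, 1) and eps = 1, the metric requests a cell size of 0.1 along x and 1.0 along y, i.e. cells stretched in the direction of weak curvature.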

- Sub-project 3: Criteria based on statistical quantities for static mesh adaptation in LES (G. Balarac, N. Odier, A. Grenouilloux):

The main objective of this sub-project was to develop a strategy for automatic mesh convergence based on statistical quantities. The proposed strategy is independent of the flow case and of the user. It is designed to guarantee that the energy balance of the overall system is independent of the mesh. This strategy combines criteria previously proposed by Benard et al. (2015) and Daviller et al. (2017).

- Sub-project 4: Automated Mesh Convergence plugin re-integration (R. Mercier, J. Leparoux, A. Grenouilloux):

The main objective of this sub-project was to integrate the Automated Mesh Convergence (AMC) plugin developed by Safran Tech into the YALES2 distribution. This was successfully done during the workshop. Moreover, additional criteria were integrated. In particular, the y_plus criterion from the Duprat law (A. Grenouilloux PhD) was considered in order to control cell size in boundary layers.
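The Duprat wall law used by the AMC criterion is not reproduced here. As a generic sketch of how a y_plus-based sizing criterion works, the standard definitions y+ = y u_τ/ν and u_τ = sqrt(τ_w/ρ) give both the y+ of an existing near-wall cell and the wall distance needed to reach a target y+. The function names and the refinement rule are hypothetical.

```python
import math

def friction_velocity(tau_wall, rho):
    """u_tau = sqrt(tau_w / rho)."""
    return math.sqrt(tau_wall / rho)

def y_plus(y, tau_wall, rho, nu):
    """Dimensionless wall distance y+ = y * u_tau / nu."""
    return y * friction_velocity(tau_wall, rho) / nu

def wall_distance_for_y_plus(target, tau_wall, rho, nu):
    """Wall distance that yields the requested y+ (inverse of y_plus)."""
    return target * nu / friction_velocity(tau_wall, rho)

def needs_refinement(y, tau_wall, rho, nu, target):
    """Hypothetical sizing flag: refine a near-wall cell whose y+ exceeds the target."""
    return y_plus(y, tau_wall, rho, nu) > target
```

For example, with tau_wall = rho = 1 and nu = 1e-5, a first cell at y = 1e-4 has y+ = 10 and would be flagged for a target y+ of 5.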

- Sub-project 5: Dynamic mesh adaptation for DNS/LES of isolated vortices (L. Bricteux, G. Balarac):

The main objective of this sub-project was to develop a dynamic mesh adaptation strategy for the simulation of isolated vortices, and to compare it with DNS on a static mesh or with vortex methods. A well-documented test case of a 2D vortex was considered. Criteria based on the palinstrophy were successfully proposed, allowing simulations with dynamic mesh adaptation to reach the same accuracy as the reference methods.
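The exact palinstrophy-based criteria are not given here. As an illustration only, the palinstrophy of a 2D flow is P = 1/2 ∫ |∇ω|² dA, with vorticity ω = ∂v/∂x - ∂u/∂y. A simple adaptation sensor, which is hypothetical, flags cells where the local density 1/2 |∇ω|² exceeds a fraction `frac` of its maximum. The sketch assumes a uniform periodic grid and central differences, unlike the unstructured meshes used in the codes.

```python
import numpy as np

def vorticity(u, v, dx, dy):
    """omega = dv/dx - du/dy on a periodic grid (axis 0 = x, axis 1 = y), central differences."""
    dvdx = (np.roll(v, -1, axis=0) - np.roll(v, 1, axis=0)) / (2.0 * dx)
    dudy = (np.roll(u, -1, axis=1) - np.roll(u, 1, axis=1)) / (2.0 * dy)
    return dvdx - dudy

def palinstrophy_density(omega, dx, dy):
    """Local 0.5 * |grad omega|^2; its integral over the domain is the palinstrophy."""
    dwdx = (np.roll(omega, -1, axis=0) - np.roll(omega, 1, axis=0)) / (2.0 * dx)
    dwdy = (np.roll(omega, -1, axis=1) - np.roll(omega, 1, axis=1)) / (2.0 * dy)
    return 0.5 * (dwdx**2 + dwdy**2)

def refine_flags(u, v, dx, dy, frac=0.1):
    """Hypothetical criterion: flag cells whose local palinstrophy exceeds frac * max."""
    p = palinstrophy_density(vorticity(u, v, dx, dy), dx, dy)
    return p > frac * p.max()
```

For a Gaussian vortex, the flagged cells form a ring around the core where the vorticity gradient is large, while the far field is left coarse.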

- Sub-project 6: Dynamic mesh adaptation for non-statistically stationary turbulence (U. Vigny, L. Bricteux, Y. Dubief, P. Bénard):

The main objective of this sub-project was to test dynamic mesh adaptation strategies for flow configurations where statistical quantities are unavailable (unlike SP3) and where many vortices spanning a broad range of scales coexist (unlike SP5). Various quantities based on the velocity gradient, the Q criterion or a passive scalar have been tested, but no unified strategy has emerged yet. A procedure based on a multi-objective genetic algorithm (GA) has been initiated to identify the optimal dynamic mesh adaptation parameters, minimizing computational cost while maximizing solution quality.
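Among the tested quantities, the Q criterion has a standard definition. The velocity gradient A = ∇u is split into its symmetric strain-rate part S and its antisymmetric rotation part Ω, and Q = 1/2 (‖Ω‖² - ‖S‖²) is positive where rotation dominates strain. The sketch below evaluates it per cell from an array of velocity-gradient tensors. The thresholding function and its parameter are hypothetical; the source does not say which threshold or normalisation was used.

```python
import numpy as np

def q_criterion(grad_u):
    """Q = 0.5 * (||Omega||^2 - ||S||^2) for tensors of shape (..., 3, 3),
    where grad_u[..., i, j] = d u_i / d x_j."""
    grad_t = np.swapaxes(grad_u, -1, -2)
    strain = 0.5 * (grad_u + grad_t)      # symmetric part S
    rotation = 0.5 * (grad_u - grad_t)    # antisymmetric part Omega
    return 0.5 * (np.sum(rotation**2, axis=(-2, -1)) - np.sum(strain**2, axis=(-2, -1)))

def q_refine_flags(grad_u, q_threshold):
    """Hypothetical adaptation sensor: flag cells where Q exceeds a threshold."""
    return q_criterion(grad_u) > q_threshold
```

A pure rotation gives Q = 1 and a pure strain gives Q = -1 for unit gradients, so a positive threshold selects vortex cores.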

Multi-phase flows - V. Moureau, CORIA

- scalar transport in two-phase flows

- three-phase flows: contact angle

Numerics - G. Lartigue, CORIA

Participants: Ghislain LARTIGUE and Vincent MOUREAU (CORIA), Manuel BERNARD and Guillaume BALARAC (LEGI), Nicolas ODIER and Benjamin MARTIN (CERFACS)

This project gathered four sub-projects related to numerical methods. Most of these activities concern the high-order schemes presented in [1] in the context of the finite-volume method.

- Sub-project 1 (N. Odier, B. Martin, G. Lartigue): The main objective of this sub-project was to implement a High-Order Finite-Volume method in the Cell-Vertex compressible code AVBP.

- Sub-project 2 (M. Bernard, G. Balarac, G. Lartigue): The main objective of this sub-project was to use the High-Order Finite-Volume method to solve the Poisson equation in the incompressible code YALES2.

In the context of the projection method, special attention needs to be paid to the accuracy of the coupling between the pressure and velocity fields. The keystone to achieving this is the ability to solve the Poisson problem for the pressure efficiently. During the workshop, we focused on the resolution of a generic Poisson problem using the conjugate gradient (CG) algorithm. The idea was to use, at each CG iteration, the high-order Laplacian operator recently developed on the basis of high-order schemes [1]. This high-order Laplacian operator is more accurate than the classical one used in YALES2 (SIMPLEX [3]). However, using it within the conjugate gradient algorithm does not improve the accuracy of the solution of the Poisson problem. Further investigations are ongoing to evaluate the potential improvement in the velocity-field correction obtained with the pressure arising from the inversion of the high-order Laplacian operator.
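A minimal matrix-free conjugate gradient sketch shows where the Laplacian enters each CG iteration. The operator is passed as a callable, so a different operator (for instance a high-order one, as in the workshop experiment) could be substituted without touching the solver. Here a standard second-order 1D operator with homogeneous Dirichlet boundaries is used purely as a stand-in, and nothing here is the YALES2 implementation. CG requires a symmetric positive-definite operator, hence the -d²/dx² sign convention.

```python
import numpy as np

def neg_laplacian_1d(p, h):
    """Second-order -d2p/dx2 on interior nodes, homogeneous Dirichlet boundaries (SPD)."""
    padded = np.concatenate(([0.0], p, [0.0]))
    return (2.0 * padded[1:-1] - padded[:-2] - padded[2:]) / h**2

def conjugate_gradient(apply_a, b, tol=1e-10, max_iter=1000):
    """Matrix-free CG for A x = b, where apply_a(x) returns A x and A is SPD."""
    x = np.zeros_like(b)
    r = b - apply_a(x)          # initial residual
    d = r.copy()                # initial search direction
    rr = r @ r
    for _ in range(max_iter):
        if np.sqrt(rr) <= tol * np.linalg.norm(b):
            break
        ad = apply_a(d)         # the only place the operator is applied
        alpha = rr / (d @ ad)
        x += alpha * d
        r -= alpha * ad
        rr_new = r @ r
        d = r + (rr_new / rr) * d
        rr = rr_new
    return x
```

For example, `conjugate_gradient(lambda q: neg_laplacian_1d(q, h), b)` solves the discrete Poisson problem. Any replacement operator only needs to provide the same `apply_a(x)` interface and remain symmetric positive-definite for CG to apply.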

- Sub-project 3 (G. Sahut, G. Balarac, G. Lartigue): The main objective of this sub-project was to implement a URANS method with a semi-implicit solver in YALES2.

- Sub-project 4 (G. Lartigue, V. Moureau): The main objective of this sub-project was to improve the precision and robustness of the Laplacian operator in YALES2. There are two classes of operators in YALES2: ROBUST (a.k.a. PAIR_BASED and IGNORE_SKEWNESS) and PRECISE (a.k.a. SIMPLEX). It has been shown that for operators with constant coefficients (as in the ICS and VDS solvers), the PRECISE approach is unconditionally stable and must be used in all situations. However, in the SPS solver, density variations across a pair of vertices can lead to an operator that is not positive semi-definite (non-PSD). A major achievement of the workshop was the proposal of a hybrid operator that mixes both approaches to achieve both precision and robustness. This operator will be implemented in the near future.

- Discussion (All): A two-hour discussion on Tuesday afternoon was dedicated to the analysis of paper [2]. This paper deals with an optimal way of mixing a robust low-order numerical scheme with a high-order scheme. The major interest of this mixing technique is that it preserves the boundedness of the solution through so-called convex limiting. This is similar to WENO techniques, but it relies on the resolution of interface Riemann problems.
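The principle discussed in [2] is to start from a bounded low-order update and add as much of the high-order correction as the local bounds allow. It can be illustrated with the closely related flux-corrected transport (FCT) limiter of Zalesak, applied to 1D periodic linear advection. This is a swapped-in and much simplified technique, not the invariant-domain algorithm of [2]. In this sketch the low-order flux is first-order upwind, the high-order flux is Lax-Wendroff, and the bounds are the local min/max of the previous solution.

```python
import numpy as np

def limited_ratio(q, p):
    """q/p where p != 0, else 1; clipped to [0, 1] to absorb round-off."""
    r = np.ones_like(q)
    nz = p != 0.0
    r[nz] = q[nz] / p[nz]
    return np.clip(r, 0.0, 1.0)

def fct_advection_step(u, c):
    """One step of periodic 1D linear advection (positive speed, CFL number 0 < c <= 1).

    Face index i stands for face i+1/2; fluxes are pre-multiplied by dt/dx.
    """
    u_right = np.roll(u, -1)
    u_left = np.roll(u, 1)
    f_low = c * u                                          # upwind flux
    f_high = c * u + 0.5 * c * (1.0 - c) * (u_right - u)   # Lax-Wendroff flux
    anti = f_high - f_low                                  # antidiffusive correction

    u_low = u - (f_low - np.roll(f_low, 1))                # bounded, conservative update

    # Local bounds from the previous solution on the stencil {i-1, i, i+1}
    u_max = np.maximum(np.maximum(u_left, u), u_right)
    u_min = np.minimum(np.minimum(u_left, u), u_right)

    # Cell i receives +anti[i-1] through its left face and -anti[i] through its right face
    gain_left = np.roll(anti, 1)
    loss_right = -anti
    p_plus = np.maximum(gain_left, 0.0) + np.maximum(loss_right, 0.0)
    p_minus = np.minimum(gain_left, 0.0) + np.minimum(loss_right, 0.0)
    r_plus = limited_ratio(u_max - u_low, p_plus)
    r_minus = limited_ratio(u_min - u_low, p_minus)

    # A positive correction at face i+1/2 raises cell i+1 and lowers cell i, and vice versa
    limiter = np.where(anti >= 0.0,
                       np.minimum(np.roll(r_plus, -1), r_minus),
                       np.minimum(r_plus, np.roll(r_minus, -1)))
    limited = limiter * anti
    return u_low - (limited - np.roll(limited, 1))
```

A limiter of 1 everywhere recovers Lax-Wendroff, which overshoots near discontinuities, while a limiter of 0 recovers upwind, which is bounded but diffusive. The limiter picks, face by face, the largest blend that keeps each cell within [u_min, u_max], and the flux form keeps the scheme conservative.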

[1] Manuel Bernard, Ghislain Lartigue, Guillaume Balarac, Vincent Moureau, Guillaume Puigt. A framework to perform high-order deconvolution for finite-volume method on simplicial meshes. International Journal for Numerical Methods in Fluids, 92 (11), pp. 1551-1583, 2020.

[2] Jean-Luc Guermond, Bojan Popov, Ignacio Tomas. Invariant domain preserving discretization-independent schemes and convex limiting for hyperbolic systems. Computer Methods in Applied Mechanics and Engineering, 347, pp. 143-175, 2019.

[3] Ruben Specogna, Francesco Trevisan. A discrete geometric approach to solving time independent Schrödinger equation. Journal of Computational Physics, pp. 1370-1381, 2011.

Turbulent flows - P. Bénard, CORIA

- turbulence injection

- wall modeling

- rotor modeling for wind or hydro turbines applications

- advanced post processing for unsteady turbulence

User experience - R. Mercier, SAFRAN TECH

- automation & workflows for HPC

- on-line and off-line analysis of massive datasets

Fluid structure interaction - S. Mendez, IMAG

Participants: Thomas Fabbri and Guillaume Balarac (LEGI), Barthélémy Thibaud and Simon Mendez (IMAG), Likhitha Ramesh Reddy and Axelle Viré (TU Delft), and Pierre Bénard (CORIA)

This project gathered three sub-projects related to fluid-structure interaction (FSI). Their common feature was the FSI solver of YALES2, which is based on a partitioned approach. The FSI solver couples an Arbitrary Lagrangian-Eulerian solver that predicts the fluid motion in a moving domain (FSI_ALE) with a solver for structural dynamics (FSI_SMS), both of which are YALES2 solvers. The FSI solver was initiated by Thomas Fabbri (LEGI, Grenoble), and the objectives of ECFD4 were to optimize it and extend its use to several teams by improving its performance, demonstrating its versatility and adding multiphysics effects. All the projects made interesting progress and will continue over the next weeks and months.

- Sub-project 1 (Thomas Fabbri and Guillaume Balarac, LEGI): The aim of this sub-project was to decrease the time spent computing the fluid grid deformation, which is currently the most expensive part of the calculation. The strategy is to solve for the deformation field on a coarse mesh and apply it to the fine mesh after interpolation. Many building blocks for such a task already exist in YALES2 (multiple grids, interpolation...), but they are not currently suited to this application. The work performed during the workshop consisted of identifying the relevant subroutines and starting to code the method. Many parts of the method are functional, and the next step is to combine them properly and test the efficiency of the approach.

- Sub-project 2 (Barthélémy Thibaud and Simon Mendez, IMAG): The aim of this sub-project was to validate the FSI solver in the case of a flexible valve bent by a pulsatile flow. A proper workflow (sequence of runs) was defined during the week to run this simulation, and the first results are extremely promising, already comparing fairly well with reference results from the literature. This workshop has also helped strengthen IMAG's experience with the solver.

- Sub-project 3 (Likhitha Ramesh Reddy and Axelle Viré, TU Delft and Pierre Bénard, CORIA): The long-term aim of this sub-project is to perform simulations of the flow around floating wind turbines, which constitutes a huge challenge, as it combines the difficulties of wind turbine flows, two-phase flows and fluid-structure interaction. During the workshop, the aim was to progress on two aspects: the use of the YALES2 two-phase flow solver, SPS, in a moving domain (coupling SPS and ALE) and the coupling with FSI. Both tasks were tackled: preliminary validation simulations were performed for the SPS-ALE solver, and the strategy to couple the SPS-ALE solver with FSI has been clearly identified within the group.

- Common work: TU Delft (Sub-project 3) needs to perform FSI without deformation of the structure, so coupling with the SMS solver may not be necessary. Tests were performed to study the ability of SMS to handle very stiff materials that mimic rigid bodies, and the first tests were very convincing. In the future, however, a rigid-body motion solver is planned in YALES2 as an alternative to SMS. This task involves the four teams of the project and is a clear shared objective for the coming months.

- Bugs and cleaning: minor bugs were identified in the FSI solver, mostly related to rarely used options. They were corrected and pushed to the YALES2 GitLab.

- Documentation: the information shared between participants for the use and understanding of the SMS and FSI solvers has been directly gathered in the YALES2 wiki.
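The coarse-to-fine strategy of Sub-project 1 can be sketched in 1D under strong simplifying assumptions. The mesh displacement is modeled here as the solution of a variable-stiffness Laplace problem, a common Laplacian-smoothing choice that is assumed here and is not necessarily what the FSI_ALE solver uses. It is solved on a coarse grid and then linearly interpolated onto the fine grid. The stiffness law used in the example, `1/(x + 0.1)`, stiffens the region near the moving wall and is hypothetical, as are the function names.

```python
import numpy as np

def solve_displacement(x, wall_disp, stiffness):
    """Solve d/dx(k(x) dd/dx) = 0 on nodes x with d(x[0]) = wall_disp and d(x[-1]) = 0.

    Conservative finite differences; k is evaluated at edge midpoints.
    """
    n = len(x)
    k = stiffness(0.5 * (x[1:] + x[:-1])) / np.diff(x)   # edge conductances k / h
    a = np.zeros((n, n))
    rhs = np.zeros(n)
    a[0, 0] = a[-1, -1] = 1.0                            # Dirichlet rows
    rhs[0] = wall_disp
    for i in range(1, n - 1):
        a[i, i - 1] = k[i - 1]
        a[i, i + 1] = k[i]
        a[i, i] = -(k[i - 1] + k[i])
    return np.linalg.solve(a, rhs)

def coarse_to_fine(x_coarse, x_fine, wall_disp, stiffness):
    """Hypothetical two-grid strategy: solve on the coarse grid, interpolate to the fine one."""
    d_coarse = solve_displacement(x_coarse, wall_disp, stiffness)
    return np.interp(x_fine, x_coarse, d_coarse)
```

With an 11-node coarse grid and a 201-node fine grid, only an 11 x 11 system is solved, and the interpolated displacement stays within a fraction of a percent of the exact profile for this smooth case. The gain depends on how smooth the deformation field is compared with the fine-mesh resolution.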

GENCI Hackathon - G. Staffelbach, CERFACS

Participants: V. Moureau (CORIA), P. Bégou (LEGI), J. Legaux, G. Staffelbach (CERFACS), L. Stuber, F. Courteille (NVIDIA), T. Braconnier, P.-E. Bernard (HPE).

GPU acceleration is the keystone towards exascale computing, as evidenced by the TOP500 list, where two thirds of the top 50 systems are now accelerated. The objective of this workshop was first to reevaluate the performance of both AVBP and YALES2 following their initial GPU port, conducted in 2019 under a contrat de progrès between GENCI and HPE with the support of IDRIS. The next goals were to update the codes as much as possible to their current versions, assess new porting and optimisation opportunities, and carry them out where possible.

YALES2: The YALES2 solver has evolved immensely since the 2019 port, and most of the time was spent merging and updating the code to today's standards. An updated branch with the current source code has been released (*branch*), and profiling and optimisation tools have been tested on CORIA and LEGI platforms. In parallel, using the GPU-enabled CVODE library to accelerate the chemistry solver in YALES2 was investigated. This proved more complex than anticipated, as the library did not build as-is with the latest release of the NVIDIA SDK; this issue was promptly solved with the help of NVIDIA. Coupling YALES2 with the accelerated library appears to require more extensive knowledge of OpenACC and CUDA. The team is highly motivated to pursue this line of thought and will probably take part in the IDRIS hackathon initiative in May 2021 to continue this effort.

AVBP: Efforts to port AVBP to GPU have continued through a second grand challenge on the Jean Zay system, targeting the port of a complex industrial-type combustion chamber (DGENCC). In preparation for this workshop, the new models required for the DGENCC simulation were ported to GPU and a performance analysis was undertaken. A new branch, WIP/GC_JZ2, is currently available and allows accelerated simulation of this type of workflow. Under the guidance of NVIDIA and HPE, the following optimisation avenues have been identified:

- removal of extended temporary arrays;

- replacement of implicit vector assignments;

- collapsing of compute-driven loops.

Integrating these efforts into some of the kernels yielded a 4.2x speedup of the accelerated counterpart, using 4 NVIDIA V100 GPUs, over a full CPU compute node with 40 Cascade Lake cores. Furthermore, the case was tested in strong scaling up to 1024 GPUs with excellent performance.