Difference between revisions of "Ecfd:ecfd 7th edition"


Revision as of 15:50, 5 February 2024

Contents

- 1 Description

- 2 Agenda

- 3 Thematics / Mini-workshops

- 4 Projects

- 4.1 Hackathon GENCI - P. Begou, LEGI

- 4.2 Mesh adaptation - R. Letournel, Safran

- 4.3 Numerics - S. Mendez, IMAG & G. Balarac, LEGI

- 4.3.1 N1: Treatment of boundary conditions for high-order schemes - M. Bernard & G. Balarac (LEGI), G. Lartigue (Total Energies)

- 4.3.2 N2: Implementation of linearised implicit time integration in ALE solver - T. Berthelon & G. Balarac (LEGI)

- 4.3.3 N5: Optimization of the RBC solver - F. Rojas & S. Mendez (IMAG)

- 4.3.4 N6: Electrodeformation of red blood cells, extension to 3D and improved accuracy at membrane - A. Spadotto & S. Mendez (IMAG), M. Bernard (LEGI)

- 4.4 Turbulence - P. Benard, CORIA & L. Bricteux, UMONS

- 4.5 Two Phase Flow - M. Cailler, Safran Tech & V. Moureau, CORIA

- 4.6 Combustion - K. Bioche, CORIA & R. Mercier, Safran

- 4.7 User Experience & Data - L. Korzeczek, GDTech

Description

- Event from 22nd of January to 2nd of February 2024

- Location: Hôtel Club de la Plage, Merville-Franceville, near Caen (14)

- Two types of sessions:

- common technical presentations: roadmaps, specific points

- mini-workshops. Potential workshops are listed below

- Free of charge

- More than 70 participants from academia, HPC centers/experts and industry.

- Objectives

- Bring together experts in high-performance computing, applied mathematics and multi-physics CFD

- Identify the technological barriers to exascale CFD via numerical experiments

- Identify industrial needs and challenges in high-performance computing

- Propose action plans to add to the development roadmaps of the CFD codes

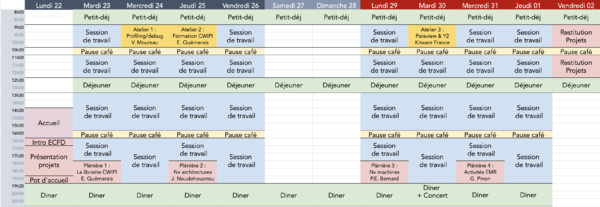

Agenda

Thematics / Mini-workshops

These mini-workshops may change and cover more or fewer topics. This page will be updated according to your feedback.

To come...

Projects

Hackathon GENCI - P. Begou, LEGI

The GENCI Hackathon will be devoted to porting two CFD codes to the MI250 GPUs of the Adastra supercomputer deployed by GENCI at CINES.

For the YALES2 code, the goal is to obtain a first reference version that gives the expected results and then, if possible, to start optimizing it for performance. The approach is based on OpenACC, with the objective of an implementation that is as non-intrusive as possible in the existing code and that remains portable with the work done on the Nvidia GPUs of the Jean-Zay supercomputer at IDRIS.

The porting of the AVBP code is more advanced with a prototype already functional on Adastra but "hard-coded". The objective is to rationalize this first implementation, to integrate the latest developments in the code, to centralize memory management (host and device), to work on porting the Lagrangian part of the code and, of course, to improve the global performance.

This Hackathon is supported by GENCI, HPE, AMD and CINES, with several development experts on AMD GPUs present on site.

Mesh adaptation - R. Letournel, Safran

M1: ASMR for reheat chamber applications - Paul Pouech (CERFACS), Thibault Duranton, Luis Carbajal Carrasco (Safran)

Combustion in reheat chambers features a wide range of length scales. Mesh refinement is thus mandatory to capture the flow characteristics at a reasonable CPU cost for LES computations using the AVBP code. The purpose of this project is to consolidate mesh refinement criteria and strategy on an academic reference case. The retained workflow is supported by the Lemmings code, which calls the Tékigô wrapper for the mesh adaptations. During ECFD7, the convergence time needed to obtain a significant distribution of the quantities of interest was analysed. An optimum runtime, based on a characteristic flow time scale, was thus identified and led to a reduced running time for each adaptation step. As a second step, discussions with the ECFD7 participants led to the identification of relevant refinement criteria, such as the flame sensor or the RMS of the Mach number. A parametric analysis showed the robustness of the workflow based on a weighting of different criteria. Finally, to facilitate the use of the workflow, efforts were made to improve the user experience by making it more human-readable.

M2: Parallel remeshing - B. Andrieu, C. Benazet, K. Hoogveld, B. Maugars, E. Quémerais (ONERA)

Mesh adaptation is a crucial tool for automating industrial RANS numerical simulations. To meet this need, mesh adaptation must be carried out as quickly as possible with an efficient, parallel solution. To this end, we have explored two avenues: a parallel edge-splitting algorithm that has recently been initiated in the ParaDiGM library, and a solution based on the refine library for adapting meshes with an MPI implementation. On the one hand, we fixed several bugs in our split operator and validated it on test cases of increasing complexity with a node-centered solver. On the other hand, we added interfaces to refine so as to avoid using files and to call it directly in library mode. We also investigated geometric projection issues during the mesh adaptation procedure, notably by looking at solutions such as EGADS, which offers a simplified API for CAD interrogation. Finally, we implemented metric gradation (in serial), metric intersection and complexity computations. All the ingredients we have tested give us a clearer picture of the entire mesh adaptation process.

Numerics - S. Mendez, IMAG & G. Balarac, LEGI

N1: Treatment of boundary conditions for high-order schemes - M. Bernard & G. Balarac (LEGI), G. Lartigue (Total Energies)

In the context of the Finite Volume Method, the spatial accuracy of a numerical scheme depends on the ability to accurately evaluate fluxes through the interfaces of each control volume (CV). Such accurate evaluation is not straightforward, especially when dealing with distorted grids. This project follows the work of [1], where fluxes use pointwise quantities that are reconstructed from the integrated quantities advanced in time. During the workshop, a task force was dedicated to the treatment of **inlet** boundary conditions (BC) and **non-planar walls**. For inlet BC, the key lies in the spatial integration of the convective flux over the discrete faces of the CV touching the boundary. Such treatment leads to exact integration for a linear inlet profile and a large error reduction for other profiles. Concerning non-planar walls, the adopted strategy consists in enforcing the BC on each discrete face by modifying the normal component of the wall gradient in order to accurately evaluate the diffusive flux. Again, a large reduction of this error has been observed.

[1] : A framework to perform high-order deconvolution for finite-volume method on simplicial meshes, IJNMF 2020, Bernard et al.
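The benefit of integrating an inlet profile over a boundary face, rather than sampling it at a single point, can be illustrated with a minimal sketch. It is not the YALES2 implementation: the function names, the triangle and the profile coefficients are hypothetical, and the edge-midpoint quadrature is only one possible choice of rule. On a planar triangular face, this rule recovers the exact face average of a linear profile, whereas a pointwise sample at a vertex does not.

```python
def triangle_area(a, b, c):
    """Area of a planar triangle from its 2D vertex coordinates."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def face_average(profile, a, b, c):
    """Face average of `profile` using the edge-midpoint rule.

    This three-point rule is exact for polynomials up to degree 2,
    hence in particular for a linear inlet profile.
    """
    midpoints = [
        (0.5 * (a[0] + b[0]), 0.5 * (a[1] + b[1])),
        (0.5 * (b[0] + c[0]), 0.5 * (b[1] + c[1])),
        (0.5 * (c[0] + a[0]), 0.5 * (c[1] + a[1])),
    ]
    return sum(profile(x, y) for x, y in midpoints) / 3.0


def linear_profile(x, y):
    """Hypothetical linear inlet profile (arbitrary coefficients)."""
    return 2.0 + 3.0 * x - 1.5 * y


# Hypothetical boundary face of a control volume.
A, B, C = (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)
centroid = ((A[0] + B[0] + C[0]) / 3.0, (A[1] + B[1] + C[1]) / 3.0)

# For a linear profile, the exact face average is the value at the centroid.
exact_average = linear_profile(*centroid)
integrated_average = face_average(linear_profile, A, B, C)
pointwise_value = linear_profile(*A)  # naive sample at a boundary node

# Integrated inlet quantity over the face (average times area).
integrated_flux = integrated_average * triangle_area(A, B, C)

print("integration error:", abs(integrated_average - exact_average))
print("pointwise error:  ", abs(pointwise_value - exact_average))
```

The same `face_average` helper can be applied to non-linear profiles, where the error is reduced but generally not removed.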

N2: Implementation of linearised implicit time integration in ALE solver - T. Berthelon & G. Balarac (LEGI)

A linearised implicit time integration has recently been developed in the incompressible solver of YALES2. This new integration scheme allows the use of larger time steps than those imposed by the classic stability criteria inherent to explicit time integration methods. This reduces the restitution time of Large Eddy Simulations [1]. The objective of this project was to implement this new time integration in the ALE solver in order to reduce the restitution time of moving-mesh configurations.

The developments were validated on a scalar advection case and on a rotor-stator interaction case. Although the results seem to be in line with the explicit integration methods, the validation of the temporal convergence to 2nd order remains to be shown.

[1] Toward the use of LES for industrial complex geometries. Part II: Reduce the time-to-solution by using a linearised implicit time advancement, Berthelon et al., JoT, 2022
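The stability argument can be illustrated on a 1D periodic scalar advection problem. The sketch below is an assumption-laden toy and not the YALES2 scheme: it uses a first-order upwind discretisation with backward Euler (first order in time, unlike the second-order convergence targeted above), a fixed mesh, and hypothetical function names. Since scalar advection at constant velocity is already linear, there is nothing to linearise here; the example only shows that the implicit update stays bounded at a CFL number where the explicit update diverges.

```python
def explicit_upwind_step(u, cfl):
    """Forward Euler + first-order upwind on a periodic grid (stable only for cfl <= 1)."""
    # u[i - 1] wraps around for i == 0, which gives the periodic boundary.
    return [u[i] - cfl * (u[i] - u[i - 1]) for i in range(len(u))]


def implicit_upwind_step(u, cfl):
    """Backward Euler + first-order upwind on a periodic grid.

    Solves (1 + cfl) * v[i] - cfl * v[i - 1] = u[i] directly by writing
    v[i] = p[i] + q[i] * v[n - 1] and closing the periodic loop.
    """
    n = len(u)
    d = 1.0 + cfl
    p = [0.0] * n
    q = [0.0] * n
    p[0] = u[0] / d
    q[0] = cfl / d
    for i in range(1, n):
        p[i] = (u[i] + cfl * p[i - 1]) / d
        q[i] = cfl * q[i - 1] / d
    last = p[-1] / (1.0 - q[-1])
    return [p[i] + q[i] * last for i in range(n)]


def run(step, u0, cfl, n_steps):
    """Advance the initial field u0 by n_steps using the given step function."""
    u = list(u0)
    for _ in range(n_steps):
        u = step(u, cfl)
    return u


# Square pulse advected at CFL = 4, well above the explicit stability limit.
u0 = [1.0 if 10 <= i < 20 else 0.0 for i in range(64)]
u_explicit = run(explicit_upwind_step, u0, 4.0, 50)
u_implicit = run(implicit_upwind_step, u0, 4.0, 50)

print("explicit max |u|:", max(abs(v) for v in u_explicit))
print("implicit max |u|:", max(abs(v) for v in u_implicit))
```

The implicit step remains bounded and conserves the integral of the scalar, at the price of solving a linear system at every step and of additional numerical diffusion for this low-order toy scheme.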

N5: Optimization of the RBC solver - F. Rojas & S. Mendez (IMAG)

N6: Electrodeformation of red blood cells, extension to 3D and improved accuracy at membrane - A. Spadotto & S. Mendez (IMAG), M. Bernard (LEGI)

The Leaky Dielectric Model is a popular framework to describe electric stresses over micro-scale membranes. We have adopted it to simulate the effect of a DC electric field on a red blood cell using the YALES2BIO solver. The goal of the project is to reproduce the electric charging process of the membrane, as well as the resulting stresses, which may lead to electrodeformation of the cell. From the point of view of the implementation, the membrane is represented by a 2D surface mesh embedded in a 3D Eulerian grid. The need to make variables stored on the surface interact with quantities stored on the Eulerian grid calls for a proper bidirectional 2D-membrane/3D-grid dynamic connectivity. The progress made on this task during this ECFD has led to the first 3D simulation of a charging fixed spherical shell. Moreover, the estimation of grid variables on elements cut by the membrane has been improved thanks to a high-order extrapolation. The latter has been successfully tested on 2D configurations. The project opens the way for a series of validation tests. In particular, future work will require the treatment of instabilities emerging in symmetrical configurations.

Turbulence - P. Benard, CORIA & L. Bricteux, UMONS

T4: Atmospheric solver

Wind turbines, which keep growing in size, are now influenced by atmospheric flows. An atmospheric solver has already been developed in YALES2 to represent some of these effects (Coriolis, veer, thermal stratification). In this continuity, the project has been divided into two work-packages.

- Work-package 1: Use of the variable density solver (VDS). Before ECFD7, thermal stratification was taken into account using the Boussinesq buoyancy approximation within the incompressible solver framework. Now the VDS can be used, taking into account all thermal effects. Results are promising.

- Work-package 2: Wall-law velocity filtering. Wall laws use the velocity at the first grid node to compute the wall shear stress. Before ECFD7, atmospheric wall laws used the local velocity, which sometimes led to convergence errors. Now a gather-scatter filter can be used to average the velocity (and temperature) at the first grid node.
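The idea behind the velocity filtering of work-package 2 can be sketched as follows. This is a hypothetical, simplified version and not the YALES2 filter: nodes are connected by a plain edge list, values are gathered to each edge as a two-point mean, then scattered back and averaged at the nodes. The function name and the ring-shaped test connectivity are assumptions made for illustration.

```python
def gather_scatter_filter(values, edges):
    """Smooth nodal values with one gather-scatter pass over the edges.

    Gather: each edge receives the mean of its two end-node values.
    Scatter: each node receives the average of the means of its edges.
    Nodes without any edge keep their original value.
    """
    accumulated = [0.0] * len(values)
    count = [0] * len(values)
    for i, j in edges:
        edge_mean = 0.5 * (values[i] + values[j])  # gather to the edge
        accumulated[i] += edge_mean                # scatter back to both nodes
        accumulated[j] += edge_mean
        count[i] += 1
        count[j] += 1
    return [
        accumulated[k] / count[k] if count[k] else values[k]
        for k in range(len(values))
    ]


# Hypothetical first-node velocities with node-to-node oscillations
# around a mean of 10, on a closed ring of 8 wall-adjacent nodes.
velocity = [9.0, 11.0, 9.0, 11.0, 9.0, 11.0, 9.0, 11.0]
ring_edges = [(i, (i + 1) % len(velocity)) for i in range(len(velocity))]

filtered_velocity = gather_scatter_filter(velocity, ring_edges)
print(filtered_velocity)
```

On this regular ring, one pass is equivalent to a (1/4, 1/2, 1/4) stencil, which removes the node-to-node oscillation while preserving the mean. Feeding the filtered value to a wall law, instead of the local one, is the kind of smoothing described above.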

Two Phase Flow - M. Cailler, Safran Tech & V. Moureau, CORIA

P3: Blood platelets adhesion model - C. Raveleau, S. Mendez, F. Nicoud (IMAG)

Medical devices in contact with blood (e.g. artificial valves) are used to treat various cardiovascular diseases, but their thrombogenicity remains the main unresolved issue in their development. A numerical model of blood platelets is being constructed to help understand the effect of surface microstructuring on the thrombogenicity of artificial surfaces. The Force Coupling Method (FCM) was previously implemented and allows the modelling of ellipsoidal particles and their interaction with the surrounding fluid. During the workshop, the particle model was extended to include adhesive and repulsive interactions with walls or with other particles. The adhesive bonds are modelled with springs that form when the distance between a node of a particle surface and a node of the wall or of another particle is smaller than a given threshold. The stiffness of the bond is increased after a given formation time to mimic the two-step adhesion process of platelets to von Willebrand Factor. A Lennard-Jones potential was used to model the collision of particles. Future work will aim at generalizing these implementations to an arbitrary number of particles (they currently work for two particles only) and at ensuring that the interactions are unaltered by the crossing of a periodic boundary.
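The interaction rules described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the YALES2BIO implementation: the names `Bond`, `try_form_bond`, `bond_force` and `lennard_jones_force` are hypothetical, the bond rest length is assumed to be the node-to-node distance at formation, the stiffness switch is assumed to be a simple step in time, and all parameter values are arbitrary. Forces are signed along the separation direction, positive meaning repulsive.

```python
from dataclasses import dataclass


@dataclass
class Bond:
    """Spring bond between two surface nodes (hypothetical data structure)."""
    rest_length: float  # assumption: distance at the time of formation
    age: float = 0.0    # time elapsed since the bond formed


def try_form_bond(distance, threshold):
    """Create a bond when two nodes are closer than the threshold, else None."""
    return Bond(rest_length=distance) if distance < threshold else None


def bond_force(bond, distance, k_weak, k_strong, formation_time):
    """Linear spring force; the stiffness increases once the bond is old enough.

    A stretched bond (distance > rest_length) gives a negative, attractive force.
    """
    stiffness = k_strong if bond.age >= formation_time else k_weak
    return -stiffness * (distance - bond.rest_length)


def lennard_jones_force(r, epsilon=1.0, sigma=1.0):
    """Radial Lennard-Jones force, F = -dU/dr with U = 4*eps*((s/r)**12 - (s/r)**6)."""
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r


# A node approaches a wall node: a bond forms below the threshold distance.
bond = try_form_bond(distance=0.8, threshold=1.0)
young_force = bond_force(bond, 1.0, k_weak=1.0, k_strong=10.0, formation_time=0.5)

# After the formation time, the same stretch is resisted by a stiffer bond.
bond.age = 0.6
mature_force = bond_force(bond, 1.0, k_weak=1.0, k_strong=10.0, formation_time=0.5)

print("young bond force: ", young_force)
print("mature bond force:", mature_force)
print("LJ force at r = 0.9 sigma:", lennard_jones_force(0.9))
```

In a particle solver, these scalar forces would be projected on the unit vector joining the two nodes and accumulated on each particle; that step is omitted here.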

Combustion - K. Bioche, CORIA & R. Mercier, Safran

User Experience & Data - L. Korzeczek, GDTech

U4 : CWIPI 1.0 porting - N. Dellinger, B. Andrieu, K. Hoogveld, E. Quémerais (ONERA), A. Grenouilloux (CORIA), R. Letournel (Safran Tech)

Coupling is a cornerstone of numerical simulation, especially for addressing multi-physics problems using highly specialized solvers for each phenomenon. The CWIPI library, developed at ONERA for coupling codes in a massively parallel environment, has been used in YALES2 for many years for internal and external coupling. Significant developments have been carried out in recent years to improve the performance and usability of CWIPI, resulting in the release of version 1 in July 2023. This version features a completely revised API to overcome the limitations of version 0.12 and offer more possibilities to users. The goal of this project was to support users in their transition to version 1. A training course based on Jupyter Notebooks was first organized. Assistance was then provided to successfully port MoDeTheC's and YALES2's internal couplings to the new version. Some fixes were made in CWIPI along the way and will be included in a new patched version.