Difference between revisions of "Ecfd:ecfd 7th edition"

(→N4: Non-uniform outlet pressure and coupling with CWIPI - J. B. Lagaert (LMO), Y. Lakrifi, T. Berthelon, G.Balarac (LEGI) & R. Letournel (Safran)) |

(→Turbulence - P. Benard, CORIA & L. Bricteux, UMONS) |


| (19 intermediate revisions by 4 users not shown) | |||

| Line 65: | Line 65: | ||

Mesh adaptation is a crucial tool for automating industrial RANS numerical simulations. To meet this need, mesh adaptation must be carried out as quickly as possible, which calls for an efficient, parallel solution. To this end, we have explored two avenues: a parallel edge-splitting algorithm that has recently been initiated in the ParaDiGM library, and a solution based on [https://github.com/nasa/refine the refine library] for adapting meshes with an MPI implementation. On the one hand, we fixed several bugs in our split operator and validated it on test cases of increasing complexity with a node-centered solver. On the other hand, we added interfaces to refine so as to avoid using files and to call it directly in library mode. We also investigated geometric projection issues during the mesh adaptation procedure, notably by looking at solutions such as EGADS, which offers a simplified API for CAD interrogation. Finally, we implemented metric gradation (in serial), metric intersection and complexity computations. All the ingredients we have tested give us a clearer picture of the entire mesh adaptation process.
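As an illustration of two of the ingredients named above, the following minimal sketch shows one common way to intersect two metric tensors (simultaneous reduction) and to evaluate a discrete complexity. It is not the ParaDiGM or refine implementation; the function names `intersect_metrics` and `complexity`, and the use of per-vertex dual volumes, are assumptions made for this example.

```python
import numpy as np


def intersect_metrics(m1, m2):
    """Intersect two symmetric positive-definite metrics (hypothetical helper).

    Simultaneous reduction: in the basis where m1 is the identity, m2 is
    diagonalised and the largest eigenvalue is kept in each direction.
    """
    chol = np.linalg.cholesky(m1)              # m1 = chol @ chol.T
    chol_inv = np.linalg.inv(chol)
    reduced = chol_inv @ m2 @ chol_inv.T       # m2 expressed where m1 = I
    eigvals, eigvecs = np.linalg.eigh(reduced)
    kept = np.maximum(eigvals, 1.0)            # m1 has eigenvalue 1 everywhere
    basis = chol @ eigvecs
    return basis @ np.diag(kept) @ basis.T


def complexity(metrics, volumes):
    """Discrete complexity: sum of sqrt(det M) weighted by dual volumes."""
    dets = np.linalg.det(metrics)              # metrics has shape (n, d, d)
    return float(np.sum(np.sqrt(dets) * volumes))


if __name__ == "__main__":
    a = np.diag([1.0, 4.0])
    b = np.diag([4.0, 1.0])
    print(intersect_metrics(a, b))
    print(complexity(np.array([np.eye(2), 4.0 * np.eye(2)]), np.array([1.0, 1.0])))
```

The intersected metric prescribes, in every direction, a size no larger than either input metric, which is why the larger eigenvalue is kept.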

| − | ==== M3: Anisotropic mesh refinement - R. Barbera, G. Ghigliotti, G. Balarac (LEGI), R. Letournel (Safran) ==== | + | ==== M3: Anisotropic mesh refinement - R. Barbera (LEGI/Safran), G. Ghigliotti, G. Balarac (LEGI), R. Letournel (Safran) ==== |

Mesh adaptation is now a key feature for simulations of complex industrial flows. For transient flows such as multiphase and/or reactive flows, where regions of interest move strongly in space, dynamic mesh adaptation appears to be the most suitable strategy. This strategy is now widely used in YALES2 based on an isotropic mesh definition. The purpose of this project is to adapt this strategy to an anisotropic framework to reduce the overall simulation costs (in terms of memory consumption, CPU cost and time to solution). In order to handle multiphase flows, the main objective of the project is to study the conditions for accurately describing the dynamics of the level-set function with an anisotropic mesh. Accuracy is mainly assessed in terms of interface position and mass conservation. The inaccuracy of mass conservation is mainly due to interpolation errors after the adaptation step. Furthermore, inaccuracy in interface position may be due to misalignment between the anisotropic mesh elements and the interface normal. First methodological corrections have been proposed, in the form of an adaptation of the level-set reinitialization algorithm to the anisotropic mesh.

| Line 92: | Line 92: | ||

[1] ''Toward the use of LES for industrial complex geometries. Part II: Reduce the time-to-solution by using a linearised implicit time advancement, Berthelon et al., JoT, 2023''

| + | |||

| + | ==== N3: Parallelisation of Actuator Line Method - H. Mulakaloori (CORIA), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE) ==== | ||

| + | |||

| + | The implementation of the Actuator Line Method (ALM) in the YALES2 library leads to poor performance when many wind turbine rotors are set. Indeed, each rotor object is a derived type treated sequentially by all the processors participating in the computation. With 30 turbines in a computation, the return time is increased by 70% while the arithmetic intensity appears to be low. The objective of this sub-project is to improve the computational performance of the ALM along two avenues already identified: (i) Assign one MPI communicator per rotor object, gathering the processors close to the turbine, and set up a master/slave process within each communicator. This will allow the rotors to be computed simultaneously and reduce the number of MPI exchanges. (ii) Work on the domain decomposition to limit the number of processors attributed to each turbine. This would reduce or even eliminate MPI communications. | ||
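Point (i) can be illustrated without any MPI call. The sketch below only decides which ranks would belong to each rotor group, using the distance between a rank's subdomain centroid and the rotor centre; this proximity criterion, the `radius` parameter and the function name `rotor_groups` are assumptions for the example, not the YALES2 implementation. In an MPI code, each returned group could then be turned into its own communicator (for instance with `MPI_Comm_create_group`), with the first rank of the group acting as master.

```python
import numpy as np


def rotor_groups(rank_centroids, rotor_centers, radius):
    """Return, for each rotor, the list of ranks close to it (hypothetical).

    rank_centroids: (n_ranks, 3) centroid of each rank's subdomain.
    rotor_centers:  (n_rotors, 3) hub position of each rotor.
    radius:         ranks closer than this distance join the rotor group.
    """
    groups = []
    for center in rotor_centers:
        dist = np.linalg.norm(rank_centroids - center, axis=1)
        members = np.flatnonzero(dist <= radius)
        if members.size == 0:
            # Always keep at least the closest rank so the rotor is handled.
            members = np.array([np.argmin(dist)])
        groups.append(members.tolist())
    return groups


if __name__ == "__main__":
    centroids = np.column_stack([np.arange(10.0), np.zeros(10), np.zeros(10)])
    rotors = np.array([[1.2, 0.0, 0.0], [8.0, 0.0, 0.0]])
    print(rotor_groups(centroids, rotors, radius=1.1))
```

Because the groups are disjoint when the turbines are far enough apart, the rotors can be processed at the same time instead of one after another on all ranks.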

==== N4: Non-uniform outlet pressure and coupling with CWIPI - J. B. Lagaert (LMO), Y. Lakrifi, T. Berthelon, G. Balarac (LEGI), R. Letournel (Safran) ====

| Line 97: | Line 101: | ||

In simulations, artificial boundaries need to be introduced due to the limited size of computational domains. At these boundaries, flow variables need to be calculated in a way that does not induce any perturbation of the interior solution. During ECFD#7, a generic outlet boundary condition defined from a non-uniform pressure has been implemented in YALES2. This non-uniform pressure can be determined from a traction model (null or advected from the interior domain, for example). It can also be deduced through a coupling between two simulations. In this case, a coupling via CWIPI is performed in which the velocity and the pressure are exchanged at the common boundary to define the inlet and outlet conditions, respectively.

| − | ==== N5: Optimization of the RBC solver - F. Rojas | + | ==== N5: Optimization of the RBC solver - F. Rojas, S. Mendez (IMAG) ==== |

In the study of blood diseases, the mechanical behaviour of Red Blood Cells (RBCs) is one of the most relevant effects to take into account, both in numerical models and in experimental setups. Our system of interest is the thin gap of a rheometer where RBC suspensions are placed to explore their properties. To interpret the experimental data, simulations of large RBC suspensions are required to determine the blood’s microstructure (spatial arrangement of cells) and its rheological properties.

| Line 107: | Line 111: | ||

We thank Ghislain Lartigue and Renaud Mercier for helpful discussions.

| − | ==== N6: Electrodeformation of red blood cells, extension to 3D and improved accuracy at membrane - A. Spadotto | + | ==== N6: Electrodeformation of red blood cells, extension to 3D and improved accuracy at membrane - A. Spadotto, S. Mendez (IMAG), M. Bernard (LEGI) ==== |

The Leaky Dielectric Model is a popular framework to describe electric stresses over micro-scale membranes. We have adopted it to simulate the effect of a DC electric field on a red blood cell using the YALES2BIO solver. The goal of the project is to reproduce the electric charging process of the membrane, as well as the resulting stresses, which may lead to electrodeformation of the cell. From the point of view of the implementation, the membrane is represented by a 2D surface mesh embedded in a 3D Eulerian grid. The need to make variables stored on the surface interact with quantities stored on the Eulerian grid calls for a proper bidirectional 2D-membrane/3D-grid dynamic connectivity. Progress on this task during this ECFD has led to the first 3D simulation of a charging fixed spherical shell. Moreover, the estimation of grid variables on elements cut by the membrane has been improved thanks to a high-order extrapolation, which has been successfully tested on 2D configurations. The project opens the way for a series of validation tests. In particular, future work will require the treatment of instabilities emerging in symmetrical configurations.

| − | ==== N7: Optimisation Dorothy - M. Roperch | + | ==== N7: Optimisation Dorothy - M. Roperch, G. Pinon (LOMC), B. Gaston (CRIANN), P. Benard (CORIA) ==== |

Dorothy is a Lagrangian code using the vortex particle method. This method requires a homogeneous distribution of particles in space. To achieve this, a Cartesian grid of new particles is created at regular intervals during the simulation, and the weights of the old particles are interpolated onto the new particles. Before ECFD7, all the processors knew the whole grid and all the new particles. The aim during ECFD7 was to parallelize this module to avoid memory problems. To do this, each processor creates a grid corresponding to the particles it knows. The processors then exchange data on the superposition zones. This solves the issue because each processor knows a smaller number of new particles. During ECFD7, a trial on a vortex ring case was successfully carried out to test domain communications and superposition. The next step will be to implement this new method in the Dorothy code.
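The remeshing step described above can be sketched in one dimension as follows. This is only an illustration under stated assumptions: the linear (two-node) interpolation kernel, the 1D setting and the name `remesh_linear` are choices made for this example and are not taken from the Dorothy code, which may use a different kernel and works in 3D. Particles are assumed to lie strictly inside the grid.

```python
import numpy as np


def remesh_linear(positions, weights, x0, dx, n_nodes):
    """Redistribute particle weights onto a regular 1D grid (hypothetical).

    Each old particle shares its weight between its two neighbouring grid
    nodes with linear factors, which conserves the total weight and the
    first moment of the distribution.
    """
    new_weights = np.zeros(n_nodes)
    s = (np.asarray(positions, dtype=float) - x0) / dx
    left = np.floor(s).astype(int)
    frac = s - left
    w = np.asarray(weights, dtype=float)
    # np.add.at accumulates correctly when several particles hit one node.
    np.add.at(new_weights, left, w * (1.0 - frac))
    np.add.at(new_weights, left + 1, w * frac)
    new_positions = x0 + dx * np.arange(n_nodes)
    return new_positions, new_weights


if __name__ == "__main__":
    x_new, w_new = remesh_linear([0.25, 1.5, 2.75], [1.0, 2.0, 3.0], 0.0, 1.0, 4)
    print(x_new, w_new, w_new.sum())
```

In a parallel version along the lines described above, each processor would apply such a routine to its own local grid and then exchange the node weights in the superposition zones.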

| Line 150: | Line 154: | ||

==== T6: Development of coupling between YALES2-OpenFAST – A. Parinam (TUDelft/CORIA), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE) ====

| + | Aero-servo-elastic engineering solvers, commonly used in the wind energy industry for structural response and power assessments, are unsuited for wake simulations, as aerodynamic loads are usually derived from a BEM-like method. To tackle this, the target is to couple the YALES2 library to OpenFAST, an NREL code for wind turbines, in the same way as the already existing YALES2-BHawC coupling. | ||

| + | An external coupling library has been created, linking the YALES2 and OpenFAST libraries and enabling the exchange of information between the data structures of each code. This data exchange has been tested and validated during ECFD7. The next steps consist of exchanging the proper data during the actuator line time step and further validating the coupling. | ||

| − | ==== T7: Confidence intervals for estimators – C. Papagiannis | + | ==== T7: Confidence intervals for estimators – C. Papagiannis, G.Balarac (LEGI), R. Letournel (Safran) ==== |

| + | |||

| + | The inference of statistics from LES results requires quantifying the quality of the estimates obtained from the available sample. In CFD, the sample is the set of measurements of a QoI, and its size is closely connected to the simulation time step. Since our computational resources are finite, the sampling error of the estimates will never vanish. The purpose of this project is to provide Confidence Intervals (CI) for the inferred statistics so that the user has an indicator of the quality level of the simulation 'on-the-fly'. This requires computing the autocorrelation of the collected samples in order to correct the estimated sample variance used for the CI. This was achieved through a ''selective autocorrelation function estimator'' that also takes into account non-constant time steps. With this estimator, we calculated sample-size independent confidence intervals based on the corrected variance, and compared them to a naive estimation of the sample variance that assumes the samples are fully uncorrelated. This paves the way for a universal estimator of the autocorrelation of a QoI that incorporates autocorrelations and cross-correlations with the time step. | ||
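The effect of the autocorrelation correction can be illustrated with a textbook estimator. The sketch below is not the ''selective autocorrelation function estimator'' mentioned above: it assumes a constant time step, and it uses the integrated autocorrelation time, truncated at the first non-positive lag, to define an effective sample size. The function name `mean_confidence_interval` and the truncation rule are assumptions made for this example.

```python
import numpy as np


def mean_confidence_interval(samples, z=1.96):
    """Return (mean, naive half-width, corrected half-width) (hypothetical).

    The naive half-width treats samples as uncorrelated; the corrected one
    divides the sample size by the integrated autocorrelation time.
    """
    x = np.asarray(samples, dtype=float)
    n = x.size
    centered = x - x.mean()
    var = centered.var(ddof=1)
    # Normalised autocorrelation function for lags 0 .. n-1.
    acf = np.correlate(centered, centered, mode="full")[n - 1:] / (centered @ centered)
    # Truncate the sum at the first non-positive lag to limit noise.
    nonpos = np.flatnonzero(acf <= 0.0)
    cutoff = nonpos[0] if nonpos.size else n
    tau = 1.0 + 2.0 * acf[1:cutoff].sum()
    n_eff = n / tau
    naive = z * np.sqrt(var / n)
    corrected = z * np.sqrt(var / n_eff)
    return x.mean(), naive, corrected


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    signal = np.zeros(2000)
    for i in range(1, signal.size):
        # AR(1) process: strongly correlated consecutive samples.
        signal[i] = 0.9 * signal[i - 1] + rng.standard_normal()
    print(mean_confidence_interval(signal))
```

For a strongly correlated signal, the corrected interval is noticeably wider than the naive one, which is the behaviour the correction is meant to capture.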

=== Two Phase Flow - M. Cailler, Safran & V. Moureau, CORIA ===

| Line 176: | Line 184: | ||

==== P4: vWF Unfolding - C. Raveleau, S. Mendez, F. Nicoud (IMAG) ====

| − | ==== P5: Towards even more efficient particle algorithms - M. Helal (CORIA | + | Under certain circumstances, platelets do not bind to the surface but to a specific protein called von Willebrand factor, which has the unique property of unfolding once subjected to a sufficiently high shear flow. The aim of this project was to investigate how to represent this mechanism within the YALES2 framework. During ECFD7, the immersed-boundary methodology already used to treat thin membranes (such as the red blood cell membrane) was extended to cover 1D elements evolving in a 3D flow. Preliminary tests have been successfully carried out, notably on an embedded beam immersed in shear flow, showing the potential of this approach to include another ingredient relevant to thrombosis modeling. Future work will include adding a repulsive force to avoid non-physical binding, as well as carrying out simulations involving von Willebrand factor, platelets and red blood cells. |

| + | |||

| + | ==== P5: Towards even more efficient particle algorithms - M. Helal (CORIA/Safran), M. Cailler (Safran) ==== | ||

Lagrangian particles are widely used in the YALES2 platform to model liquid sprays, granular flows, two-phase flows with the SPH approach, or solids in the IB method.

| Line 186: | Line 196: | ||

==== P6: Two fluid and phase change in PCS - C. Merlin (Ariane Group), J. Carmona (CORIA), V. Moureau (CORIA) ====

| − | ==== P8: Wall liquid film numerical model - N. Gasnier (EM2C | + | ==== P8: Wall liquid film numerical model - N. Gasnier (EM2C/Safran), J. Leparoux (Safran), J. Carmona (CORIA) ==== |

Wall liquid films are likely to form when fuel sprays impact the walls of aeronautical fuel injection systems. Such a phenomenon may have a significant influence on the whole combustion process. However, the small scales involved prevent high-fidelity simulations of film flows in industrial geometries. Therefore, a low-order model is required to describe the dynamics of thin liquid flows under the action of spray droplets and of a turbulent gas shear. During ECFD7, a liquid film numerical model based on the 2-dimensional Shallow Water Equations and accounting for the influence of surface tension as well as gas shear was implemented in YALES2. This model was then coupled to an algorithm ensuring a proper transition between fully resolved liquid structures (level set) and the film model during liquid droplet impacts on a solid wall.

| Line 193: | Line 203: | ||

Ceramic core displacement and deformation during the casting process is a major source of manufacturing scrap for cooled blades. A possible source of core deformation is the fluid forces due to the filling of the mold with the liquid alloy. Predictive numerical simulations of the casting process would be an essential asset to increase the efficiency of the design and industrial processes. During the workshop, a numerical methodology to simulate the filling process was drawn up, with several modelling levels (with or without surface tension and slipping-wall conditions), in order to estimate the relevance of each of these models. Numerical results were then compared to available experimental results. The numerical deformation of the core was approximated as beam bending. Despite this post-processing approximation, the correlation between experimental measurements and numerical simulations is satisfactory. The evolution of the core displacement with the inlet velocity of the fluid also shows the same behaviour in the experiments and in the simulations. Future work will aim at including dynamic contact angles in the simulations, in order to evaluate the relevance of this finer modelling, as well as correlating simulations with experiments on cases more representative of the industrial process.

| − | ==== P10: Velocity regularization for Euler-Lagrange conversion - I. El Yamani (CORIA | + | ==== P10: Velocity regularization for Euler-Lagrange conversion - I. El Yamani (CORIA/Safran), M. Cailler (Safran), L. Voivenel, J. Carmona (CORIA) ==== |

The Euler-Lagrange multi-scale approach aims to reduce the computational cost of two-phase flow simulations. To reduce the cost even further, more droplets have to be converted to the Lagrangian formalism, where droplets are seen as point forces. As a consequence, droplets cannot always satisfy the hypothesis of the LPP (Lagrangian Particle Point) formalism, which is that the particle diameter has to be much smaller than the cell size. This hypothesis provides a good approximation of the undisturbed velocity seen by the Lagrangian particle. If the hypothesis is not satisfied when an Eulerian droplet is converted into a Lagrangian particle, a residual velocity field can exist, and the velocity given to the particle is therefore affected by the particle itself. This project aims to filter the gaseous velocity field with a Gaussian filter to remove the contribution of the Eulerian droplet and better approximate the undisturbed velocity.
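The idea of smoothing out a local disturbance with a Gaussian filter can be sketched in one dimension. This is a simplified illustration, not the YALES2 implementation: the 1D uniform grid, the truncation of the kernel at four standard deviations, the edge padding and the name `gaussian_filter_1d` are all assumptions made for this example.

```python
import numpy as np


def gaussian_filter_1d(field, dx, sigma):
    """Apply a normalised Gaussian filter of width sigma (hypothetical).

    field: values on a uniform 1D grid of spacing dx.
    The kernel is truncated at 4 sigma and the field is padded with its
    edge values so that the output has the same length as the input.
    """
    half = int(np.ceil(4.0 * sigma / dx))
    offsets = dx * np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()                      # preserves constant fields
    padded = np.pad(np.asarray(field, dtype=float), half, mode="edge")
    return np.convolve(padded, kernel, mode="valid")


if __name__ == "__main__":
    x = np.linspace(0.0, 1.0, 201)
    # Uniform gas velocity plus a narrow disturbance standing in for the
    # residual velocity left by a converted droplet.
    u = 1.0 + 0.5 * np.exp(-((x - 0.5) / 0.02) ** 2)
    u_filtered = gaussian_filter_1d(u, dx=x[1] - x[0], sigma=0.05)
    print(u.max(), u_filtered.max())
```

With a filter width larger than the disturbance, the filtered velocity at the droplet location moves back towards the surrounding undisturbed value.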

| Line 202: | Line 212: | ||

To reduce the high computational cost of full 3D Plasma-Assisted Combustion (PAC) simulations, the EM2C laboratory has developed phenomenological approaches to model Nanosecond Repetitively Pulsed (NRP) plasma discharges in reacting flows (Castela 2016 & Blanchard 2023). As part of previous works and ECFDs, both models were implemented and validated in the Low-Mach number framework (YALES2-VDS). While they were also implemented in the Compressible framework (YALES2-ECS), the validation against existing measurements or computations remained to be done. During the workshop, numerical simulations of pin-to-pin configurations were performed with different numerical schemes and reactive mixtures to validate both models in ECS. The energy deposition was relatively well validated through 2D simulations in the conditions of Castela et al. CNF 2016 and Rusterholtz et al. JPhysD 2013. A glimpse of baroclinic instabilities was observed through 3D simulations in the conditions of Castela et al. PROCI 2017.

| + | |||

| + | ==== C3: Dynamic sub-grid-scale wrinkling model for diffusion flames - S. Dillon (EM2C/Safran), R. Mercier (Safran), E. Espada, B. Fiorina, D. Veynante (EM2C) ==== | ||

| + | |||

| + | Large-eddy simulation (LES) of reactive flows is widely used in both academic and industrial applications. Combustion phenomena often occur at a scale smaller than the LES mesh size; therefore, turbulent combustion models are required to account for unresolved turbulence-flame interactions. The modeling of sub-grid-scale (SGS) flame-turbulence interactions can be described with a flame surface wrinkling factor, which measures the ratio of the total flame surface area to the resolved flame surface area. Flame surface wrinkling models are often expressed by assuming equilibrium between turbulent motions and flame surface wrinkling. However, non-equilibrium is present in realistic burners, and dynamic models are needed to adapt the model parameters. Fractal-like models require information about the outer and inner cut-off length scales along with a fractal exponent, which is determined dynamically from resolved scales in the LES. The dynamic formalism can be coupled with the Filtered Tabulated Chemistry for LES (F-TACLES) model, where the required cut-off length scales are tabulated in the F-TACLES table along with other filtered thermochemical variables. The coupling of the F-TACLES model with the dynamic formalism has previously been applied to premixed flames, but the formal extension to non-premixed flames has never been investigated. The objective of this project is to investigate the performance of the dynamic SGS flame surface wrinkling model coupled with the F-TACLES model for non-premixed flames. A priori tests are conducted on a 2D H2/Air reactive mixing layer and on HYLON, a 3D turbulent dual-swirl coaxial H2/Air injector. In both the 2D and 3D cases, the modelled flame surface density shows good agreement with the filtered flame surface density extracted from the DNS. Moreover, the variation of the fractal model exponent in the HYLON test case is significant, highlighting the importance of the dynamic procedure. A posteriori tests were also conducted, and the modelled chemical reaction rates show promising results. | ||

==== C4: Development of an automated virtual scheme generator for CFD - T. Luu, M. Hustache, N. Darabiha, B. Fiorina (EM2C) ====

| Line 221: | Line 235: | ||

=== User Experience & Data - L. Korzeczek, GDTech ===

| − | ==== U1: Refactoring the YALES2 tools - J. Leparoux, M. Cailler (Safran), L. Voivenel, J. Carmona, I. El Yamani ( | + | ==== U1: Refactoring the YALES2 tools - J. Leparoux, M. Cailler (Safran), L. Voivenel, J. Carmona, I. El Yamani (CORIA), S. Meynet, L. Korzeczek (GDTech) ==== |

The YALES2 distribution "tools" are becoming difficult to read and are a mixture of several types of tools. This leaves developers and end users unaware of what exists and how to use it (duplicated functions or tools) and makes it impossible to propose generic data analysis tools (FFT, confidence intervals, ...) that can be easily applied to YALES2 data structures. The main efforts have concentrated on promoting a new architecture for the YALES2 distribution tools with an object-oriented structure, including a refactored version of the main readers of YALES2 data. Several tutorials using Jupyter notebooks have been published for demonstration and explanation. A new CLI is now available under the name 'y2tools'. More work is needed before this structure can be pushed to the master trunk.

==== U2: Improved USEX for Multi-Scale Eulerian-Lagrangian simulation - L. Voivenel, J. Carmona, I. El Yamani (CORIA) J. Leparoux, M. Cailler (Safran) ====

The multi-scale Eulerian-Lagrangian approach has now reached a certain maturity and is being used to simulate fuel spray atomization. Post-processing these multi-scale simulations requires specific tools that track liquid structures described in either an Eulerian or a Lagrangian way. In this project, we implemented a strategy to register in a post-processing particle set all Eulerian droplets crossing an arbitrarily shaped surface (described with an interior boundary). The strategy is based on an artificial advancement of each Eulerian droplet (using a Lagrangian representation) and a check of the new droplet position against the surface of interest. We used this strategy to build a new post-processing tool that tracks both Eulerian and Lagrangian structures and builds particle size or velocity distributions.

Latest revision as of 10:26, 6 March 2024

Contents

- 1 Description

- 2 Agenda

- 3 Thematics / Mini-workshops

- 4 Projects

- 4.1 Hackathon GENCI - P. Begou, LEGI

- 4.2 Mesh adaptation - R. Letournel, Safran

- 4.2.1 M1: ASMR for reheat chamber applications - Paul Pouech (CERFACS), Thibault Duranton, Luis Carbajal Carrasco (Safran)

- 4.2.2 M2: Parallel remeshing - B. Andrieu, C. Benazet, K. Hoogveld, B. Maugars, E. Quémerais (ONERA)

- 4.2.3 M3: Anisotropic mesh refinement - R. Barbera (LEGI/Safran), G. Ghigliotti, G. Balarac (LEGI), R. Letournel (Safran)

- 4.3 Numerics - S. Mendez, IMAG & G. Balarac, LEGI

- 4.3.1 N1: Treatment of boundary conditions for high-order schemes - M. Bernard & G. Balarac (LEGI), G. Lartigue (Total Energies)

- 4.3.2 N2: Implementation of linearised implicit time integration in ALE solver - T. Berthelon, G. Balarac (LEGI)

- 4.3.3 N3: Parallelisation of Actuator Line Method - H. Mulakaloori (CORIA), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE)

- 4.3.4 N4: Non-uniform outlet pressure and coupling with CWIPI - J. B. Lagaert (LMO), Y. Lakrifi, T. Berthelon, G.Balarac (LEGI) , R. Letournel (Safran)

- 4.3.5 N5: Optimization of the RBC solver - F. Rojas, S. Mendez (IMAG)

- 4.3.6 N6: Electrodeformation of red blood cells, extension to 3D and improved accuracy at membrane - A. Spadotto, S. Mendez (IMAG), M. Bernard (LEGI)

- 4.3.7 N7: Optimisation Dorothy - M. Roperch, G. Pinon (LOMC), B. Gaston (CRIANN), P. Benard (CORIA)

- 4.4 Turbulence - P. Benard, CORIA & L. Bricteux, UMONS

- 4.4.1 T1: Wall Law for immersed boundaries – P. Bénez (CORIA), M. Cailler (Safran), S. Meynet (GDTech), J. Carmona (CORIA), Y. Bechane (CORIA)

- 4.4.2 T2: Turbulence injection Compressible flows – P. Tene Hedje (UMONS), J. Carmona (CORIA), Y. Bechane (CORIA), L. Bricteux (UMONS)

- 4.4.3 T3: Aero-servo-elastic simulations of wind turbines including atmospheric effects – E. Muller (SGRE), U. Vigny (UMONS), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE)

- 4.4.4 T4: Atmospheric solver – U. Vigny (UMONS), L. Voivenel (CORIA), S. Zeoli (UMONS), P. Benard (CORIA)

- 4.4.5 T5: Implementation of the RVMs-WALE model in YALES2 – L. Bricteux (UMONS), P. Benard (CORIA), Y. Bechane (CORIA)

- 4.4.6 T6: Development of coupling between YALES2-OpenFAST – A. Parinam (TUDelft/CORIA), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE)

- 4.4.7 T7: Confidence intervals for estimators – C. Papagiannis, G.Balarac (LEGI), R. Letournel (Safran)

- 4.5 Two Phase Flow - M. Cailler, Safran & V. Moureau, CORIA

- 4.5.1 P1: Level set reinitialization at the contact line for boiling flows - H. Lam, M. Benard, G. Ghigliotti (LEGI)

- 4.5.2 P2: Compatibility of Boiling solver with PCS and MPH structure - H. Lam, M. Benard, G. Ghigliotti (LEGI)

- 4.5.3 P3: Blood platelets adhesion model - C. Raveleau, S. Mendez, F. Nicoud (IMAG)

- 4.5.4 P4: vWF Unfolding - C. Raveleau, S. Mendez, F. Nicoud (IMAG)

- 4.5.5 P5: Towards even more efficient particle algorithms - M. Helal (CORIA/Safran), M. Cailler (Safran)

- 4.5.6 P6: Two fluid and phase change in PCS - C. Merlin (Ariane Group), J. Carmona (CORIA), V. Moureau (CORIA)

- 4.5.7 P8: Wall liquid film numerical model - N. Gasnier (EM2C/Safran), J. Leparoux (Safran), J. Carmona (CORIA)

- 4.5.8 P9: Casting simulation for the study of ceramic core displacement - S. Sirot, R. Mercier, M. Cailler (Safran), S. Meynet (GDTech)

- 4.5.9 P10: Velocity regularization for Euler-Lagrange conversion - I. El Yamani (CORIA/Safran), M. Cailler (Safran), L. Voivenel, J. Carmona (CORIA)

- 4.6 Combustion - K. Bioche, CORIA & R. Mercier, Safran

- 4.6.1 C1: Plasma discharge models for reacting system - S. Wang, B. Kruljevic, B. Fiorina (EM2C), Y. Bechane (CORIA)

- 4.6.2 C3: Dynamic sub-grid-scale wrinkling model for diffusion flames - S. Dillon (EM2C/Safran), R. Mercier (Safran), E. Espada, B. Fiorina, D. Veynante (EM2C)

- 4.6.3 C4: Developement of an automated virtual scheme generator for CFD - T. Luu, M. Hustache, N. Darabiha, B. Fiorina (EM2C)

- 4.6.4 C5: Partially-Stirred reactor model for MILD combustion - E. Stendardo, L. Bricteux (UMONS), M. Laignel, K. Bioche (CORIA), J. Blondeau (VUB)

- 4.6.5 C6: Static Mesh Adaptation for Hydrogen High pressure combustion using GPUs - G. Hexilar, C. Brunet, R. Mari, S. Richard (Safran), P. Pouech, Q. Douasbin, G. Staffelbach (Cerfacs)

- 4.6.6 C7: High fidelity simulation of a cone calorimeter - A. Grenouilloux, K. Bioche (CORIA), N. Dellinger (ONERA), R. Letournel (Safran)

- 4.7 User Experience & Data - L. Korzeczek, GDTech

- 4.7.1 U1: Refactoring the YALES2 tools - J. Leparoux, M. Cailler (Safran), L. Voivenel, J. Carmona, I. El Yamani (CORIA), S. Meynet, L. Korzeczek (GDTech)

- 4.7.2 U2: Improved USEX for Multi-Scale Eulerian-Lagrangian simulation - L. Voivenel, J. Carmona, I. El Yamani (CORIA) J. Leparoux, M. Cailler (Safran)

- 4.7.3 U3: Evaluate technological debt - P. Pouech, T. Marzlin, A. Dauptain (CERFACS)

- 4.7.4 U4: CWIPI 1.0 porting - N. Dellinger, B. Andrieu, K. Hoogveld, E. Quémerais (ONERA), A. Grenouilloux (CORIA), R. Letournel (Safran)

- 4.7.5 U5: Integration of YALES2 in PRESTO supervisor - A. Pushkarev (GE Vernova), G. Balarac (LEGI)

- 4.7.6 U6: Optimization of YALES2 compilation time - R. Mercier (Safran), G. Lartigue (Total Energy)

Description

- Event from 22nd of January to 2nd of February 2024

- Location: Hôtel Club de la Plage, Merville-Franceville, near Caen (14)

- Two types of sessions:

- common technical presentations: roadmaps, specific points

- mini-workshops. Potential workshops are listed below

- Free of charge

- More than 70 participants from academia, HPC centers and experts, and industry.

- Objectives

- Bring together experts in high-performance computing, applied mathematics and multi-physics CFD

- Identify the technological barriers of exaflopic CFD via numerical experiments

- Identify industrial needs and challenges in high-performance computing

- Propose action plans to add to the development roadmaps of the CFD codes

- Organizers

- Guillaume Balarac (LEGI), Simon Mendez (IMAG), Pierre Bénard, Vincent Moureau, Léa Voivenel (CORIA).

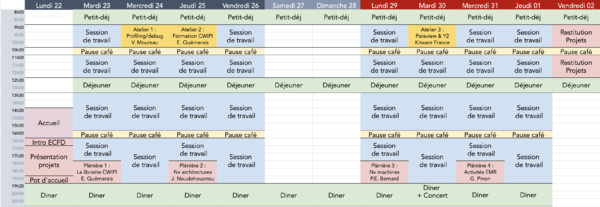

Agenda

Thematics / Mini-workshops

These mini-workshops may change and cover more or fewer topics. This page will be adapted according to your feedback.

To come...

Projects

Hackathon GENCI - P. Begou, LEGI

The GENCI Hackathon will be devoted to porting two CFD codes to the Mi250 GPUs of the Adastra supercomputer deployed by GENCI at CINES.

For the YALES2 code, the goal is to obtain a first reference version giving the expected results and then, if possible, to start optimizing it to gain performance. The approach is based on OpenACC, with the objective of an implementation that is as unintrusive as possible in the existing code and that remains portable with the work done on the Nvidia GPUs of the Jean-Zay supercomputer at IDRIS.

The porting of the AVBP code is more advanced with a prototype already functional on Adastra but "hard-coded". The objective is to rationalize this first implementation, to integrate the latest developments in the code, to centralize memory management (host and device), to work on porting the Lagrangian part of the code and, of course, to improve the global performance.

This Hackathon is supported by GENCI, HPE, AMD and CINES, with several development experts on AMD GPUs present on site.

Mesh adaptation - R. Letournel, Safran

M1: ASMR for reheat chamber applications - Paul Pouech (CERFACS), Thibault Duranton, Luis Carbajal Carrasco (Safran)

Combustion in reheat chambers features a wide range of length scales. Mesh refinement is thus mandatory to capture the flow characteristics at a reasonable CPU cost for LES computations using the AVBP code. The purpose of this project is to consolidate the mesh refinement criteria and strategy on an academic reference case. The retained workflow is supported by the Lemmings code, which calls the Tékigô wrapper for the mesh adaptations. During ECFD7, the convergence time needed to obtain a significant distribution of the quantities of interest was analysed. An optimum runtime, based on a characteristic flow time scale, was thus identified and led to a reduced running time for each adaptation step. As a second step, discussions with the ECFD7 participants led to the identification of interesting refinement criteria, such as the flame sensor or the Mach number RMS. A parametric analysis showed the robustness of the workflow based on a weighting of different criteria. Finally, in order to facilitate the use of the workflow, efforts were made to improve the user experience by making it more human readable.

M2: Parallel remeshing - B. Andrieu, C. Benazet, K. Hoogveld, B. Maugars, E. Quémerais (ONERA)

Mesh adaptation is a crucial tool for automating industrial RANS numerical simulations. To meet this need, mesh adaptation must be carried out as quickly as possible, which calls for an efficient, parallel solution. To this end, we have explored two avenues: a parallel edge-splitting algorithm that has recently been initiated in the ParaDiGM library, and a solution based on the refine library for adapting meshes with an MPI implementation. For the first, we fixed several bugs in our split operator and validated it on test cases of increasing complexity with a node-centered solver. For the second, we added interfaces to refine that avoid going through files and allow it to be called directly in library mode. We also investigated geometric projection issues during the mesh adaptation procedure, notably by looking at solutions such as EGADS, which offers a simplified API for CAD interrogation. Finally, we implemented metric gradation (in serial), metric intersection and complexity computations. All the ingredients we have tested give us a clearer picture of the entire mesh adaptation process.
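For readers unfamiliar with the metric operations mentioned above, the block below recalls the textbook definitions of metric complexity and metric intersection (by simultaneous reduction). It is only a reminder of the standard formulas; the notation ($\mathcal{M}$, $P$, $\lambda_i$, $\mu_i$) is introduced here for illustration, and the formulation actually implemented during the project may differ.

```latex
% Complexity of a continuous metric field M over the domain \Omega
\mathcal{C}(\mathcal{M}) = \int_{\Omega} \sqrt{\det \mathcal{M}(\mathbf{x})}\,\mathrm{d}\mathbf{x}

% Intersection of two metrics M_1 and M_2 by simultaneous reduction:
% the columns e_i of P are the eigenvectors of N = M_1^{-1} M_2
\lambda_i = \mathbf{e}_i^{T} \mathcal{M}_1 \mathbf{e}_i, \qquad
\mu_i = \mathbf{e}_i^{T} \mathcal{M}_2 \mathbf{e}_i

\mathcal{M}_1 \cap \mathcal{M}_2 =
P^{-T} \,\mathrm{diag}\big(\max(\lambda_i, \mu_i)\big)\, P^{-1}
```

Taking the maximum of $\lambda_i$ and $\mu_i$ keeps, in each common direction, the smaller of the two prescribed sizes, so the intersected metric honours both size requests.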

M3: Anisotropic mesh refinement - R. Barbera (LEGI/Safran), G. Ghigliotti, G. Balarac (LEGI), R. Letournel (Safran)

Mesh adaptation is now a key feature for simulations of complex industrial flows. For transient flows such as multiphase and/or reactive flows, where regions of interest move strongly in space, dynamic mesh adaptation appears to be the most suitable strategy. This strategy is now widely used in YALES2 based on an isotropic mesh definition. The purpose of this project is to adapt this strategy to an anisotropic framework to reduce the overall simulation costs (in terms of memory consumption, CPU cost and time to solution). In order to be able to handle multiphase flows, the main objective of the project is to study the conditions for accurately describing the dynamics of the level-set function with an anisotropic mesh. Accuracy is mainly assessed in terms of interface position and mass conservation. The inaccuracy of mass conservation is mainly due to interpolation errors after the adaptation step. Furthermore, inaccuracy in interface position may be due to misalignment between the anisotropic mesh elements and the interface normal. First methodological corrections have been proposed, such as an adaptation of the level-set reinitialization algorithm to the anisotropic mesh.

Numerics - S. Mendez, IMAG & G. Balarac, LEGI

N1: Treatment of boundary conditions for high-order schemes - M. Bernard & G. Balarac (LEGI), G. Lartigue (Total Energies)

In the context of the finite-volume method, the spatial accuracy of a numerical scheme depends on the ability to accurately evaluate fluxes through the interfaces of each control volume (CV). Such accurate evaluation is not straightforward, especially when dealing with distorted grids. This project follows the work of [1], where fluxes use pointwise quantities that are reconstructed from the integrated quantities advanced in time. During the workshop, the task force was dedicated to the treatment of **inlet** boundary conditions (BC) and **non-planar walls**. For inlet BC, the key lies in the spatial integration of the convective flux over the discrete faces of the CV touching the boundary. Such treatment leads to exact integration for linear inlet profiles and a large error reduction for other profiles. Concerning non-planar walls, the adopted strategy consists in enforcing the BC on each discrete face by modifying the normal component of the wall gradient in order to evaluate the diffusive flux accurately. Again, a large reduction of this error has been observed.

[1] A framework to perform high-order deconvolution for finite-volume method on simplicial meshes, Bernard et al., IJNMF, 2020

N2: Implementation of linearised implicit time integration in ALE solver - T. Berthelon, G. Balarac (LEGI)

A linearised implicit time integration has recently been developed in the incompressible solver of YALES2. This new integration scheme allows the use of larger time steps than those imposed by the classic stability criteria inherent to explicit time integration methods, which reduces the time to solution of Large Eddy Simulations [1]. The objective of this project was to implement this new time integration in the ALE solver in order to reduce the time to solution of moving-mesh configurations.

The developments were validated on a scalar advection case and on a rotor-stator interaction case. Although the results seem to be in line with the explicit integration methods, the validation of the temporal convergence to 2nd order remains to be shown.

[1] Toward the use of LES for industrial complex geometries. Part II: Reduce the time-to-solution by using a linearised implicit time advancement, Berthelon et al., JoT, 2023

N3: Parallelisation of Actuator Line Method - H. Mulakaloori (CORIA), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE)

The implementation of the Actuator Line Method (ALM) in the YALES2 library leads to poor performance when many wind turbine rotors are set. Indeed, each rotor object is a derived type treated sequentially by all the processors participating in the computation. With 30 turbines in a computation, the time to solution is increased by 70% while the arithmetic intensity appears to be low. The objective of this sub-project is to improve the computational performance of the ALM along two lines already identified: (i) Assign one MPI communicator per rotor object, gathering the processors close to the turbine, and set up a master/slave process per communicator. This will allow the rotors to be computed simultaneously and reduce the number of MPI exchanges. (ii) Work on the domain decomposition to limit the number of processors attributed to each turbine. This would reduce or even eliminate MPI communications.

N4: Non-uniform outlet pressure and coupling with CWIPI - J. B. Lagaert (LMO), Y. Lakrifi, T. Berthelon, G.Balarac (LEGI) , R. Letournel (Safran)

In simulations, artificial boundaries need to be introduced due to the limited size of computational domains. At these boundaries, flow variables need to be calculated in a way that does not induce any perturbation of the interior solution. During ECFD7, a generic outlet boundary condition defined from a non-uniform pressure has been implemented in YALES2. This non-uniform pressure can be determined from a traction model (null or advected from the interior domain, for example). It can also be deduced through a coupling between two simulations. In this case, a coupling via CWIPI is performed, where the velocity and the pressure are exchanged at the common boundary to define the inlet and outlet conditions, respectively.

N5: Optimization of the RBC solver - F. Rojas, S. Mendez (IMAG)

In the study of blood diseases, the mechanical behaviour of Red Blood Cells (RBCs) is one of the most relevant effects to take into account, both in numerical models and in experimental setups. Our system of interest is the thin gap of a rheometer where RBC suspensions are placed to explore their properties. To interpret the experimental data, simulations of large RBC suspensions are required to determine the blood's microstructure (spatial arrangement of cells) and its rheological properties.

Currently, YALES2BIO's RBC solver is capable of handling thousands of cells. To get closer to the experimental scales, we propose to characterise and optimise its performance in order to reduce the computational requirements and increase the number of RBCs and the domain sizes in our simulations. During the workshop, a parametric study was carried out to obtain the strong and weak scaling. Studying the increase in the volume fraction allowed us to quantify how rapidly the cost of the simulation increases with the number of RBCs and to identify which routines have the biggest impact on performance. One conclusion is that the cost is spread over several routines, which makes code optimization more cumbersome. However, the number of RBCs and RBC nodes duplicated over processors is identified as a key factor for performance. Indeed, as an RBC may interact with several partitions, it is duplicated as many times as needed, based on bounding box intersection criteria. The current criteria have been shown to be too loose.

In order to limit the amount of work during the RBC processing, stricter criteria were introduced to avoid unnecessary calculations at the level of the nodes, with a small gain in performance. On the other hand, much better results were obtained using Cartesian partitioning to optimise the bounding box of each processor, reducing the RBC operations involved: this demonstrates that the performance of the RBC solver may be improved by a stricter selection of RBC duplicates over processors.
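To illustrate why the tightness of the intersection criterion matters, here is a minimal sketch of a bounding-box duplication test. It is not the YALES2BIO implementation: the `Box` type, the `margin` parameter and the partition layout are hypothetical and only show how a looser criterion sends a cell to more processors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box (hypothetical helper type)."""
    lo: tuple  # (xmin, ymin, zmin)
    hi: tuple  # (xmax, ymax, zmax)


def boxes_intersect(a, b, margin=0.0):
    """True if box b overlaps box a inflated by `margin` on every side."""
    return all(
        a.lo[i] - margin <= b.hi[i] and b.lo[i] <= a.hi[i] + margin
        for i in range(3)
    )


def owners(cell_box, partition_boxes, margin=0.0):
    """Ranks on which the cell would be duplicated under the given margin."""
    return [
        rank
        for rank, pbox in enumerate(partition_boxes)
        if boxes_intersect(cell_box, pbox, margin)
    ]


# Three partitions arranged as slabs along x (Cartesian partitioning).
partitions = [
    Box((0.0, 0.0, 0.0), (1.0, 1.0, 1.0)),
    Box((1.0, 0.0, 0.0), (2.0, 1.0, 1.0)),
    Box((2.0, 0.0, 0.0), (3.0, 1.0, 1.0)),
]
cell = Box((0.6, 0.4, 0.4), (0.9, 0.6, 0.6))

loose = owners(cell, partitions, margin=0.2)   # generous safety margin
strict = owners(cell, partitions, margin=0.0)  # tight criterion
print("loose criterion :", loose)
print("strict criterion:", strict)
```

With the loose margin the cell is duplicated on two ranks, while the strict test keeps it on a single rank, which is the kind of saving the paragraph above refers to.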

We thank Ghislain Lartigue and Renaud Mercier for helpful discussions.

N6: Electrodeformation of red blood cells, extension to 3D and improved accuracy at membrane - A. Spadotto, S. Mendez (IMAG), M. Bernard (LEGI)

The Leaky Dielectric Model is a popular framework to describe electric stresses over micro-scale membranes. We have adopted it to simulate the effect of a DC electric field on a red blood cell using the YALES2BIO solver. The goal of the project is to reproduce the electric charging process of the membrane, as well as the resulting stresses, which may lead to electrodeformation of the cell. From the point of view of the implementation, the membrane is represented by a 2D surface mesh embedded in a 3D Eulerian grid. The need to make variables stored on the surface interact with quantities stored on the Eulerian grid calls for a proper bidirectional 2D-membrane/3D-grid dynamic connectivity. The progress made on this task during this ECFD has led to the first 3D simulation of a charging fixed spherical shell. Moreover, the estimation of grid variables on elements cut by the membrane has been improved thanks to a high-order extrapolation. The latter has been successfully tested on 2D configurations. The project opens the way for a series of validation tests. In particular, future work will require treating the instabilities that emerge in symmetrical configurations.

N7: Optimisation Dorothy - M. Roperch, G. Pinon (LOMC), B. Gaston (CRIANN), P. Benard (CORIA)

Dorothy is a Lagrangian code using the vortex particle method. This method requires a homogeneous distribution of particles in space. To achieve this, a Cartesian grid of new particles is created at regular intervals during the simulation, and the weights of the old particles are interpolated onto the new particles. Before ECFD7, all the processors knew the whole grid and all the new particles. The aim during ECFD7 was to parallelize this module to avoid memory problems. To do this, each processor creates a grid corresponding to the particles it knows, and the processors then exchange data on the overlap zones. This solves the issue because each processor knows a smaller number of new particles. During ECFD7, a trial on a vortex ring case was successfully carried out to test domain communications and overlaps. The next step will be to implement this new method in the Dorothy code.
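The remeshing idea described above can be sketched in one dimension as follows. This is an illustrative toy, not the Dorothy algorithm: the linear (hat) interpolation kernel, the function names and the two-processor layout are all assumptions made for brevity. It shows each processor building only the part of the grid touched by its own particles, and how the overlap (superposition) zone between two local grids can be identified.

```python
import math


def remesh_1d(positions, weights, x0, h, n):
    """Redistribute particle weights onto n regular nodes x0 + i*h.

    A linear hat kernel is assumed here; it conserves the total weight.
    """
    new_w = [0.0] * n
    for x, w in zip(positions, weights):
        s = (x - x0) / h
        i = math.floor(s)
        frac = s - i
        for node, share in ((i, 1.0 - frac), (i + 1, frac)):
            if 0 <= node < n:
                new_w[node] += w * share
    return new_w


def local_node_range(positions, x0, h):
    """Inclusive range of global node indices touched by local particles."""
    cells = [math.floor((x - x0) / h) for x in positions]
    return min(cells), max(cells) + 1


# Particles known by two hypothetical processors A and B.
pos_a, w_a = [0.25, 1.5], [2.0, 4.0]
pos_b = [1.75, 3.2]

grid_a = remesh_1d(pos_a, w_a, x0=0.0, h=1.0, n=3)
range_a = local_node_range(pos_a, x0=0.0, h=1.0)
range_b = local_node_range(pos_b, x0=0.0, h=1.0)

# Nodes present in both local grids: data must be exchanged there.
overlap = (max(range_a[0], range_b[0]), min(range_a[1], range_b[1]))
print("weights on A's local grid:", grid_a)
print("node ranges:", range_a, range_b, "overlap:", overlap)
```

Because each processor only allocates the nodes in its own range, the memory footprint scales with the local particle count rather than with the global grid, at the price of an exchange on the shared nodes.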

Turbulence - P. Benard, CORIA & L. Bricteux, UMONS

T1: Wall Law for immersed boundaries – P. Bénez (CORIA), M. Cailler (Safran), S. Meynet (GDTech), J. Carmona (CORIA), Y. Bechane (CORIA)

Conservative Lagrangian Immersed Boundaries (CLIB) are now a useful way to take complex geometries into account in YALES2. In order to study highly turbulent configurations, it appears necessary to implement wall-law models adapted to this method. Even for a non-moving immersed body, developing wall-law models in a conservative immersed boundary formalism presents numerous challenges related to the diffuse interface property of the solid and the continuous formulation of the penalty force. During the ECFD, a new formulation of the penalty force has been established to ensure the imposition of the wall shear stress across the immersed solid interface. A strategy based on the use of two near-wall level sets was implemented to estimate the wall shear stress from the LES fluid velocity field at a distance D from the solid interface. At the end of the ECFD, turbulent flat plate cases were set up to start the validation of the strategy implemented for a logarithmic wall law. Future work will focus on validating this strategy for fixed solids.
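As an illustration of how a wall shear stress can be estimated from a velocity sampled at a distance D from the wall, the sketch below inverts the classical logarithmic law with Newton's method. It is a generic textbook procedure, not the YALES2 implementation: the constants `KAPPA` and `B`, the function names and the sample values are assumptions, and the law is only meaningful when the sampling point lies in the logarithmic region.

```python
import math

KAPPA = 0.41  # von Karman constant (assumed standard value)
B = 5.2       # log-law intercept (assumed standard value)


def friction_velocity(u, dist, nu, tol=1e-12, max_iter=50):
    """Solve u/u_tau = ln(dist*u_tau/nu)/KAPPA + B for u_tau (Newton)."""
    u_tau = math.sqrt(nu * u / dist)  # laminar estimate as initial guess
    for _ in range(max_iter):
        log_term = math.log(dist * u_tau / nu) / KAPPA + B
        residual = u_tau * log_term - u
        slope = log_term + 1.0 / KAPPA  # d(residual)/d(u_tau)
        step = residual / slope
        u_tau -= step
        if abs(step) < tol * u_tau:
            break
    return u_tau


def wall_shear_stress(u, dist, nu, rho):
    """Wall shear stress tau_w = rho * u_tau**2."""
    return rho * friction_velocity(u, dist, nu) ** 2


# Hypothetical sample: air-like fluid, velocity taken 1 mm from the wall.
u, dist, nu, rho = 10.0, 1.0e-3, 1.5e-5, 1.2
u_tau = friction_velocity(u, dist, nu)
tau_w = wall_shear_stress(u, dist, nu, rho)
print(f"u_tau = {u_tau:.4f} m/s, y+ = {dist * u_tau / nu:.1f}, tau_w = {tau_w:.4f} Pa")
```

Sampling at a fixed distance D rather than at the first grid node decouples the wall-law input from the local mesh size, which is convenient when the solid interface is diffuse.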

T2: Turbulence injection Compressible flows – P. Tene Hedje (UMONS), J. Carmona (CORIA), Y. Bechane (CORIA), L. Bricteux (UMONS)

Turbulence injection for compressible flows remains a real challenge. Indeed, in these types of flow, the acoustic waves must also be controlled at the boundaries. In addition, the non-reflective formulation of the Navier-Stokes Characteristic Boundary Conditions (NSCBC) generally used in compressible solvers produces spurious pressure oscillations when applied to turbulent flows, making turbulence injection difficult for such applications. During the ECFD, two turbulence injection approaches were investigated and applied within the framework of the Explicit Compressible Solver (ECS) of YALES2. The first involved modifying the NSCBC formulation to inject turbulence from the inlet of the domain. To this end, the vortical-flow characteristic boundary condition [1] was implemented in ECS and the first validations were performed. The second was to use the actuator line method to generate a turbulence grid in the flow [2]. Future work will focus on further validating these approaches.

[1] Guézennec et al., Acoustically nonreflecting and reflecting boundary conditions for vorticity injection in compressible solvers, AIAA Journal, 47(7), 1709-1722, 2009.

[2] Houtin-Mongrolle et al., Actuator line method applied to grid turbulence generation for large-Eddy simulations, Journal of Turbulence, 21(8), 407-433, 2020.

T3: Aero-servo-elastic simulations of wind turbines including atmospheric effects – E. Muller (SGRE), U. Vigny (UMONS), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE)

Aero-servo-elastic engineering solvers used in the industry (i.e., BHawC) for structural response and power assessments are unsuited for wake simulations, as aerodynamic loads are usually derived from a BEM-like method. To tackle this, the YALES2 library was coupled (P11-ECFD3) to BHawC, the Siemens Gamesa Renewable Energy (SGRE) in-house certification code for wind turbines. This allowed the investigation of neutral atmospheric conditions. This project aims to include stable and unstable atmospheric conditions into this coupling based on the development done in T4-ECFD7. Therefore, this project is divided into three work packages: Work package 1: Adjustment and refactoring of the existing coupling library between YALES2 and BHawC. Work package 2: Rethink how turbulence is injected into the simulation (recycling in SGRE setup) to consider thermal and Coriolis effects. Work package 3: Adapt how the blade forces are computed in the coupling to consider the resulting density fluctuations.

T4: Atmospheric solver – U. Vigny (UMONS), L. Voivenel (CORIA), S. Zeoli (UMONS), P. Benard (CORIA)

Wind turbines are growing ever larger and are now influenced by atmospheric flows. An atmospheric solver has already been developed in YALES2 to represent some of these effects (Coriolis, veer, thermal stratification). Building on this, the project has been divided into two work packages.

- Work package 1: Use of the variable density solver (VDS). Before ECFD7, thermal stratification was taken into account using the Boussinesq buoyancy approximation within the incompressible solver framework. Now, the VDS can be used, taking into account all thermal effects. Results are promising.

- Work package 2: Wall law velocity filtering. Wall laws use the velocity at the first grid node to compute the wall shear stress. Before ECFD7, atmospheric wall laws used the local velocity, sometimes leading to convergence errors. Now a gather-scatter filter can be used to average the velocity (and temperature) at the first grid node.

T5: Implementation of the RVMs-WALE model in YALES2 – L. Bricteux (UMONS), P. Benard (CORIA), Y. Bechane (CORIA)

This study focused on the implementation of an advanced multiscale variational subgrid-scale model, incorporating scaling based on the WALE (Wall-Adapting Local Eddy-viscosity) model within YALES2. This model has demonstrated efficiency across various flow configurations, and it is anticipated that its multiscale nature can enhance the spectral selectivity. The aim is to ensure that its dissipative effects specifically target the smallest scales near the cut-off point.

Additionally, collaborative work with G. Balarac aimed to enhance the mesh adaptation strategy for wall-bounded flows with massive boundary layer detachment and vortical wake. This new strategy based on vortex detection was developed during the ECFD6 and ECFD4 workshops. We have now shown that this strategy is effective. Flow simulations around a hemisphere at Reynolds number Re=55K have been conducted, and we anticipate publishing the results soon.

T6: Development of coupling between YALES2-OpenFAST – A. Parinam (TUDelft/CORIA), P. Benard (CORIA), F. Houtin-Mongrolle (SGRE)

Aero-servo-elastic engineering solvers, commonly used in the wind energy industry for structural response and power assessments, are unsuited for wake simulations, as aerodynamic loads are usually derived from a BEM-like method. To tackle this, the target is to couple the YALES2 library to OpenFAST, an NREL code for wind turbines, in the same way as the already existing YALES2-BHawC coupling. An external coupling library has been created, linking the YALES2 and OpenFAST libraries and enabling the exchange of information between the data structures of each code. This data exchange has been tested and validated during ECFD7. The next steps consist in exchanging the proper data during the actuator line time step and further validating the coupling.

T7: Confidence intervals for estimators – C. Papagiannis, G.Balarac (LEGI), R. Letournel (Safran)

The inference of statistics from LES results requires quantifying the quality of the estimates obtained from the available sample. In the case of CFD, the sample is the set of measurements of a quantity of interest (QoI), and its size is very closely connected to the simulation's time step. Since our computational resources are finite, the sampling error of the estimates will never vanish. The purpose of this project is to attach Confidence Intervals (CI) to the inferred statistics so that the user has an indicator of the quality level of the simulation 'on-the-fly'. This requires computing the autocorrelation of the collected samples in order to correct the estimated sample variance used for the CI. This was achieved through a selective autocorrelation function estimator that also takes into account non-constant time steps. With this, we calculated sample-size independent confidence intervals based on the corrected variance, in contrast to a naive estimation of the sample variance that assumes the samples are fully uncorrelated. This paves the way for a universal estimator of the autocorrelation of a QoI that incorporates autocorrelations and cross-correlations with the time step.
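The effect of the autocorrelation correction can be illustrated with a minimal sketch. It is not the estimator developed in the project: it assumes a constant time step, truncates the autocorrelation sum at the first non-positive lag (a common heuristic), and uses a synthetic AR(1) signal in place of a real QoI. All function names are hypothetical.

```python
import math
import random


def autocorr(x, k, mean, var):
    """Biased sample autocorrelation of x at lag k."""
    n = len(x)
    return sum((x[i] - mean) * (x[i + k] - mean) for i in range(n - k)) / (n * var)


def mean_ci(x, z=1.96):
    """Mean of x with naive and autocorrelation-corrected CI half-widths.

    tau = 1 + 2 * sum(rho_k) inflates the variance of the mean; the sum is
    truncated at the first non-positive rho_k (assumed heuristic).
    """
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    tau = 1.0
    for k in range(1, n // 2):
        rho = autocorr(x, k, mean, var)
        if rho <= 0.0:
            break
        tau += 2.0 * rho
    half_naive = z * math.sqrt(var / n)       # samples assumed independent
    half_corr = z * math.sqrt(var * tau / n)  # correlated samples
    return mean, half_naive, half_corr, tau


# Synthetic, strongly correlated signal standing in for a QoI time series.
random.seed(0)
signal = [0.0]
for _ in range(1999):
    signal.append(0.9 * signal[-1] + random.gauss(0.0, 1.0))

mean, half_naive, half_corr, tau = mean_ci(signal)
print(f"mean = {mean:.3f}")
print(f"naive 95% CI half-width     = {half_naive:.3f}")
print(f"corrected 95% CI half-width = {half_corr:.3f} (tau = {tau:.1f})")
```

For correlated samples the corrected interval is wider than the naive one, since only about n/tau of the n samples carry independent information.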

Two Phase Flow - M. Cailler, Safran & V. Moureau, CORIA

P1: Level set reinitialization at the contact line for boiling flows - H. Lam, M. Benard, G. Ghigliotti (LEGI)

In Savinien Pertant's PhD thesis (2022), DNS of nucleate boiling at the bubble scale were performed, but suffered from a lack of accuracy in the imposition of the contact angle. Indeed, the contact angle was not well respected, with a difference of around 10 degrees between the desired angle and the angle measured on the solution. This lack of accuracy, which contrasts with the accurate imposition obtained in the spray solver (SPS), is due to fluctuations of the contact line. This behavior was traced back to the modifications of the level set reinitialisation needed to correctly take the triple line into account, and the solution applied in 2022 was to revert to the standard Janodet reinitialisation. At the very end of his PhD, S. Pertant tested a correction which nullifies the temperature transport term at the first node from the wall of the contact line. This correction was introduced to overcome an instability of the code when the contact line velocity on the substrate changes direction, from receding to advancing. It turned out during ECFD7 that this correction is very efficient at stabilising the contact line for contact angles between 50 and 90 degrees, even when the level set reinitialization is used. We were able to simulate nucleate boiling with a smooth contact line at the triple line and a precision on the contact angle of around ±0.5 degrees. More work remains to be able to run DNS of nucleate boiling for extreme contact angles (<50° and >100°). Moreover, longer runs will be needed to further confirm these results.

P2: Compatibility of Boiling solver with PCS and MPH structure - H. Lam, M. Benard, G. Ghigliotti (LEGI)

The boiling solver has not worked since the introduction of the MPH data structure and the PCS solver in March 2022. An investigation was carried out to understand the changes made between the previous and the new versions of the different solvers. A simple test case was created to show potential differences between the working version of the code and the new one. Several problems were spotted: the order of level set declaration became important, as only the first one declared is advected; the sign convention for the mass transfer rate was chosen differently; and the temporal discretization of the level set was different. A test case with no flow and an imposed mass transfer rate (i.e., no coupling of the level set with the temperature field) was run successfully, and the results of the commit prior to the March 2022 modifications were retrieved. More work is needed to find the origin of the differences between the two solvers when the temperature field is solved and coupled with the level set and the velocity field. New common test cases for the two solvers will have to be implemented in order to cross-validate the results and prevent such cases from happening again (i.e., cross-fertilization).

P3: Blood platelets adhesion model - C. Raveleau, S. Mendez, F. Nicoud (IMAG)

Medical devices in contact with blood (e.g. artificial valves) are used to treat various cardiovascular diseases, but their thrombogenicity remains the main unresolved issue in their development. A numerical model of blood platelets is being constructed to help understand the effect of microstructuration on the thrombogenicity of artificial surfaces. The Force Coupling Method (FCM) was previously implemented and allows the modeling of ellipsoidal particles and their interaction with the surrounding fluid. During the workshop, the particle model was extended to include adhesive and repulsive interactions with walls or with other particles. The adhesive bonds are modeled with springs that form when the distance between a node of a particle surface and a node of the wall or of another particle is smaller than a given threshold. The stiffness of the bond is increased after a given formation time to mimic the 2-step adhesion process of platelets to von Willebrand Factor. A Lennard-Jones potential was used to model the collision of particles. Future work will aim at generalizing these implementations to an arbitrary number of particles (they currently work for 2 particles only) and at ensuring that the interactions are unaltered by the crossing of a periodic boundary.
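The interaction ingredients described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the YALES2 implementation: the function names, the linear-spring form, the rest length, the two stiffness values and the standard 12-6 Lennard-Jones form are all hypothetical choices made for the example.

```python
def should_form_bond(distance, threshold):
    """A bond forms when two surface nodes are closer than the threshold."""
    return distance < threshold


def bond_force(distance, rest_length, bond_age, k_weak, k_strong, t_formation):
    """Spring force magnitude in one adhesive bond (positive = attractive).

    Assumption: a linear spring whose stiffness switches from k_weak to
    k_strong once the bond is older than t_formation (two-step adhesion).
    """
    stiffness = k_strong if bond_age >= t_formation else k_weak
    return stiffness * (distance - rest_length)


def lennard_jones_force(r, epsilon, sigma):
    """Radial force from the 12-6 Lennard-Jones potential (positive = repulsive).

    U(r) = 4*epsilon*((sigma/r)**12 - (sigma/r)**6), F(r) = -dU/dr.
    """
    sr6 = (sigma / r) ** 6
    return 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r
```

With these definitions, a bond stretched by 0.5 length units pulls five times harder after the formation time if `k_strong = 5 * k_weak`, and the Lennard-Jones force changes sign at `r = 2**(1/6) * sigma`.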

P4: vWF Unfolding - C. Raveleau, S. Mendez, F. Nicoud (IMAG)

Under certain circumstances, platelets do not bind to the surface but to a specific protein called von Willebrand factor (vWF), which has the unique property of unfolding once subjected to a sufficiently high shear flow. The aim of this project was to investigate how to represent this mechanism within the YALES2 framework. During ECFD7, the immersed-boundary methodology already used to treat thin membranes (such as the red blood cell membrane) was extended to cover 1D elements evolving in a 3D flow. Preliminary tests have been successfully carried out, notably on an embedded beam immersed in shear flow, showing the potential of this approach to include another ingredient relevant to thrombosis modeling. Future work will include adding a repulsive force to avoid non-physical binding, as well as carrying out simulations involving von Willebrand factor, platelets and red blood cells.

P5: Towards even more efficient particle algorithms - M. Helal (CORIA/Safran), M. Cailler (Safran)

Lagrangian particles are widely used in the YALES2 platform to model liquid sprays, granular flows, two-phase flows with the SPH approach, or solids in the IB method. Despite significant developments to efficiently handle large numbers of particles in massively parallel simulations, the growing use of particles in YALES2 makes it necessary to re-evaluate and optimize the performance of the Lagrangian particle algorithms. The objective of this project was twofold: to analyze and improve the performance and robustness of the newly developed SPH solver of YALES2, and to improve the performance of Lagrangian particle relocation (identification of the connectivity between the Lagrangian particles and the Eulerian grid) during Dynamic Mesh Adaptation. Regarding the first subject, profiling tools were used to identify the hot spots and bottlenecks in the SPH solver. Optimizations including code factorization and the removal of string comparisons reduced the computational cost by a factor of 3. Moreover, the solver was made more robust. In the second sub-project, a new implicit 4th-level decomposition was introduced. This implicit decomposition consists of a contiguous coloring of sub-el_grp within each element group. The availability of these smaller groups of elements was used to improve the local particle relocation algorithm, which mainly relies on bounding-box comparisons. The new relocation algorithm was tested for various numbers of sub-el_grp on a representative gear lubrication case, showing a decrease in the cost of the relocation algorithm by a factor of 3 to 5. The next step is to extend the use of sub-el_grp to the interpolation algorithm.
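To illustrate why smaller element groups help a relocation step based on bounding-box comparison, here is a minimal sketch. It is not the YALES2 algorithm: the two-level layout (groups, then sub-groups), the axis-aligned boxes and all names are assumptions made for the example.

```python
def in_box(point, box):
    """True if the point lies inside the axis-aligned box (lo corner, hi corner)."""
    lo, hi = box
    return all(l <= x <= h for x, l, h in zip(point, lo, hi))


def candidate_subgroups(point, group_boxes, subgroup_boxes):
    """Return (group, sub-group) index pairs whose boxes contain the point.

    group_boxes[g] is the box of element group g; subgroup_boxes[g][s] is the
    box of sub-group s inside group g. A whole group is rejected with a single
    test before any of its sub-groups is examined.
    """
    hits = []
    for g, gbox in enumerate(group_boxes):
        if not in_box(point, gbox):
            continue  # cheap rejection of the entire group
        for s, sbox in enumerate(subgroup_boxes[g]):
            if in_box(point, sbox):
                hits.append((g, s))
    return hits
```

The tighter the sub-group boxes, the fewer elements remain to be searched exactly once the candidates are known, which is the kind of gain the finer decomposition is meant to provide.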

P6: Two fluid and phase change in PCS - C. Merlin (Ariane Group), J. Carmona (CORIA), V. Moureau (CORIA)

P8: Wall liquid film numerical model - N. Gasnier (EM2C/Safran), J. Leparoux (Safran), J. Carmona (CORIA)

Wall liquid films are likely to form when fuel sprays impact the walls of aeronautical fuel injection systems. This phenomenon may have a significant influence on the whole combustion process; however, the small scales involved prevent high-fidelity simulations of film flows in industrial geometries. Therefore, a low-order model is required to describe the dynamics of thin liquid flows under the action of spray droplets and of a turbulent gas shear. During ECFD7, a liquid film numerical model based on the 2-dimensional Shallow Water Equations and accounting for the influence of surface tension as well as gas shear was implemented in YALES2. This model was then coupled to an algorithm ensuring a proper transition between fully resolved liquid structures (level set) and the film model during liquid droplet impacts on a solid wall.

P9: Casting simulation for the study of ceramic core displacement - S. Sirot, R. Mercier, M. Cailler (Safran), S. Meynet (GDTech)

Ceramic core displacement and deformation during the casting process is a major source of scrap in the manufacturing of cooled blades. A possible source of core deformation is the fluid forces due to the filling of the mold with the liquid alloy. Predictive numerical simulations of the casting process would be an essential asset to increase the efficiency of the design and industrial processes. During the workshop, a numerical methodology to simulate the filling process was set up, with several modelling levels (with or without surface tension and slipping-wall conditions), in order to estimate the relevance of each of these models. Numerical results were then compared to available experimental results. The deformation of the core was approximated in post-processing as the bending of a beam. Despite this post-processing approximation, the correlation between experimental measurements and numerical simulations is satisfactory. The evolution of the core displacement with the inlet velocity of the fluid also shows the same behaviour in the experiments and in the simulations. Future work will aim at including dynamic contact angles in the simulations, in order to evaluate the relevance of this finer modelling, as well as correlating simulations with experiments on cases more representative of the industrial process.

P10: Velocity regularization for Euler-Lagrange conversion - I. El Yamani (CORIA/Safran), M. Cailler (Safran), L. Voivenel, J. Carmona (CORIA)

The Euler-Lagrange multi-scale approach aims to reduce the computational cost of two-phase flow simulations. To reduce the cost even further, more droplets have to be converted to the Lagrangian formalism, in which droplets are seen as point forces. As a consequence, droplets do not always satisfy the hypothesis of the LPP (Lagrangian Particle Point) formalism, namely that the diameter of the particle has to be much smaller than the cell size. This hypothesis ensures a good approximation of the undisturbed velocity seen by the Lagrangian particle. If the hypothesis is not satisfied when an Eulerian droplet is converted into a Lagrangian particle, a residual velocity field can exist, and the velocity given to the particle is therefore affected by the particle itself. This project aims to filter the gaseous velocity field with a Gaussian filter in order to remove the contribution of the Eulerian droplet and better approximate the undisturbed velocity.
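The effect of Gaussian filtering on a locally disturbed velocity field can be illustrated on a uniform 1D grid. This is a toy sketch only: the project works on the YALES2 unstructured solver and its actual kernel width, truncation and boundary treatment are not described here, so `sigma`, `half_span` and the renormalization near the domain ends are assumptions.

```python
import math


def gaussian_filter_1d(u, dx, sigma, half_span=None):
    """Filter the samples u (uniform spacing dx) with a truncated Gaussian kernel.

    sigma is the kernel standard deviation in physical units. The kernel is
    truncated at about 3*sigma by default and renormalized near the domain
    ends, so a uniform field is left unchanged (both are assumed choices).
    """
    if half_span is None:
        half_span = max(1, int(math.ceil(3.0 * sigma / dx)))
    offsets = range(-half_span, half_span + 1)
    weights = [math.exp(-0.5 * (k * dx / sigma) ** 2) for k in offsets]
    n = len(u)
    filtered = []
    for i in range(n):
        num = 0.0
        den = 0.0
        for k, w in zip(offsets, weights):
            j = i + k
            if 0 <= j < n:  # skip stencil points outside the domain
                num += w * u[j]
                den += w
        filtered.append(num / den)
    return filtered


# A uniform gas velocity of 1.0 with a local disturbance at the droplet location.
velocity = [1.0] * 21
velocity[10] = 5.0
smoothed = gaussian_filter_1d(velocity, dx=1.0, sigma=2.0)
```

In this toy case the value at the disturbed node moves back towards the surrounding velocity, while a perfectly uniform field passes through the filter unchanged.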

Combustion - K. Bioche, CORIA & R. Mercier, Safran

C1: Plasma discharge models for reacting system - S. Wang, B. Kruljevic, B. Fiorina (EM2C), Y. Bechane (CORIA)

To reduce the high computational cost of full 3D Plasma-Assisted Combustion (PAC) simulations, the EM2C laboratory has developed phenomenological approaches to model Nanosecond Repetitively Pulsed (NRP) plasma discharges in reacting flows (Castela 2016 & Blanchard 2023). As part of previous works and ECFDs, both models were implemented and validated in the Low-Mach number framework (YALES2-VDS). They were also implemented in the Compressible framework (YALES2-ECS), but their validation against existing measurements or computations remained to be done. During the workshop, numerical simulations of pin-to-pin configurations were performed with different numerical schemes and reactive mixtures to validate both models in ECS. The energy deposition was relatively well validated through 2D simulations in the conditions of Castela et al. CNF 2016 and Rusterholtz et al. JPhysD 2013. A glimpse of baroclinic instabilities was observed through 3D simulations in the conditions of Castela et al. PROCI 2017.

C3: Dynamic sub-grid-scale wrinkling model for diffusion flames - S. Dillon (EM2C/Safran), R. Mercier (Safran), E. Espada, B. Fiorina, D. Veynante (EM2C)

Large-eddy simulation (LES) of reactive flows is widely used in both academic and industrial applications. Combustion phenomena often occur at scales smaller than the LES mesh size; therefore, turbulent combustion models are required to account for unresolved flame-turbulence interactions. Sub-grid-scale (SGS) flame-turbulence interactions can be described with a flame surface wrinkling factor, which measures the ratio of the total flame surface area to the resolved flame surface area. Flame surface wrinkling models are often derived by assuming equilibrium between turbulent motions and flame surface wrinkling; however, in realistic burners non-equilibrium is present and dynamic models are needed to adapt the model parameters. Fractal-like models require information about the outer and inner cut-off length scales along with a fractal exponent, which is determined dynamically from the resolved scales in the LES. The dynamic formalism can be coupled with the Filtered Tabulated Chemistry for LES (F-TACLES) model, where the required cut-off length scales are tabulated in the F-TACLES table along with other filtered thermochemical variables. The coupling of the F-TACLES model with the dynamic formalism has previously been applied to premixed flames; however, the formal extension to non-premixed flames has never been investigated. The objective of this project is to investigate the performance of the dynamic SGS flame surface wrinkling model coupled with the F-TACLES model for non-premixed flames. A priori tests are conducted on a 2D H2/Air reactive mixing layer and on HYLON, a 3D turbulent dual-swirl coaxial H2/Air injector. In both 2D and 3D cases, the modelled flame surface density shows good agreement with the filtered flame surface density extracted from the DNS. Moreover, the variation of the fractal model exponent in the HYLON test case is significant, highlighting the importance of the dynamic procedure.
A posteriori tests were also conducted, and modelled chemical reaction rates show promising results.
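As a reminder of how the three ingredients named above combine, a fractal-like wrinkling factor is commonly written as the ratio of the outer to the inner cut-off length scale raised to the fractal exponent. The sketch below assumes this generic power-law form and clips the ratio at 1 so that no sub-grid wrinkling is predicted when the flame is resolved. The function and argument names are hypothetical, and the dynamic evaluation of the exponent is not reproduced.

```python
def wrinkling_factor(outer_cutoff, inner_cutoff, beta):
    """Fractal-like sub-grid wrinkling factor (outer/inner)**beta.

    outer_cutoff and inner_cutoff are length scales in the same unit; beta is
    the fractal model exponent, supplied here as a plain number although the
    dynamic procedure would compute it from resolved fields. The clipping at 1
    is an assumed choice.
    """
    if inner_cutoff <= 0.0:
        raise ValueError("inner_cutoff must be positive")
    ratio = max(outer_cutoff / inner_cutoff, 1.0)
    return ratio ** beta
```

For example, with an outer cut-off four times the inner one, changing the exponent from 0.5 to 0.25 lowers the wrinkling factor from 2 to about 1.41, which shows how sensitive the model is to the exponent.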

C4: Development of an automated virtual scheme generator for CFD - T. Luu, M. Hustache, N. Darabiha, B. Fiorina (EM2C)

In reactive CFD simulations, a non-negligible part of the computational time is spent solving the chemical system. Simplified chemistry models aim to reduce the number of transported species while still ensuring a correct representation of the phenomena of interest. Among them, the virtual chemistry method consists of using “virtual” species and reactions to reproduce detailed chemistry results through a mechanism of drastically smaller size. These “virtual” species and reactions are optimized to target quantities of interest such as temperature, laminar flame speed or pollutants. In practice, the optimization is done using a learning database composed of representative canonical reactive configurations computed with detailed chemistry. The objective of this project was to develop a tool to easily generate virtual schemes. The tool, named VISION (Virtual Scheme optimizatION), is currently able both to generate a user-defined database covering a wide range of reactive configurations and to optimize a given scheme structure using either CANTERA or REGATH.

C5: Partially-Stirred reactor model for MILD combustion - E. Stendardo, L. Bricteux (UMONS), M. Laignel, K. Bioche (CORIA), J. Blondeau (VUB)

MILD combustion produces intense turbulence and extensive reaction zones, which would require costly mesh refinement over large areas. Practical meshes are too coarse to resolve these zones, which leaves sub-grid heterogeneity and turbulent fluctuations unresolved. A Partially Stirred Reactor (PaSR) model was implemented to account for turbulence-combustion interaction. This model multiplies the chemical source term by a limiter factor, which models the residence time in the reactive structures inside each cell. Testing various limiter formulations based on mixing and chemical timescales revealed increased computational costs. Future work aims to reduce costs by applying the model only where necessary. This ongoing research seeks to optimize performance while minimizing computational overhead for efficient application in engineering scenarios.
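One limiter formulation often used in Partially Stirred Reactor models takes the reacting fraction of the cell as the ratio of the chemical timescale to the sum of the chemical and mixing timescales. The sketch below assumes this form purely for illustration: the paragraph above does not say which formulations were tested, and the function names are hypothetical.

```python
def pasr_limiter(tau_chem, tau_mix):
    """Limiter kappa = tau_chem / (tau_chem + tau_mix), between 0 and 1.

    Assumed formulation: kappa tends to 1 when mixing is much faster than
    chemistry (tau_mix -> 0) and to 0 when mixing is the slow process.
    """
    if tau_chem < 0.0 or tau_mix < 0.0 or tau_chem + tau_mix == 0.0:
        raise ValueError("timescales must be non-negative and not both zero")
    return tau_chem / (tau_chem + tau_mix)


def limited_source(source_term, tau_chem, tau_mix):
    """Chemical source term multiplied by the limiter factor."""
    return pasr_limiter(tau_chem, tau_mix) * source_term
```

With equal chemical and mixing timescales the source term is halved, and when mixing is three times slower than chemistry only a quarter of it is retained.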

C6: Static Mesh Adaptation for Hydrogen High pressure combustion using GPUs - G. Hexilar, C. Brunet, R. Mari, S. Richard (Safran), P. Pouech, Q. Douasbin, G. Staffelbach (Cerfacs)